Answer:

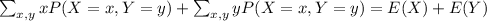

(a)

![E[X+Y]=E[X]+E[Y]](https://img.qammunity.org/2021/formulas/mathematics/high-school/s1kasivvzc6ih2m3aevbto8ozhbmajzbr6.png)

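(b)

$$\operatorname{Var}(X+Y)=\operatorname{Var}(X)+\operatorname{Var}(Y) \quad \text{for independent } X \text{ and } Y$$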

Explanation:

Let X and Y be discrete random variables, and let E(X) and Var(X) denote the expected value and variance of X, respectively.

(a) We want to show that E[X + Y] = E[X] + E[Y].

When we have two random variables instead of one, we work with their joint distribution P(X=x, Y=y).

For a function f(X,Y) of the discrete variables X and Y, the expected value is defined as

![E[f(X,Y)]=\sum_(x,y)f(x,y)\cdot P(X=x, Y=y).](https://img.qammunity.org/2021/formulas/mathematics/high-school/h9nya9uabg0hy28ip9tn6qqbqgxo58r9cu.png)
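The definition above translates directly into a sum over the entries of a joint distribution. The following is a minimal sketch, assuming a small made-up joint pmf; the names `joint_pmf` and `expectation` are illustrative and not part of the original answer.

```python
from fractions import Fraction

# Hypothetical joint pmf: keys are (x, y) pairs, values are P(X=x, Y=y).
joint_pmf = {
    (0, 0): Fraction(1, 8),
    (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4),
    (1, 1): Fraction(1, 4),
}

def expectation(f, pmf):
    """Return E[f(X, Y)] = sum over (x, y) of f(x, y) * P(X=x, Y=y)."""
    return sum(f(x, y) * p for (x, y), p in pmf.items())

# The probabilities of a valid pmf must add up to 1.
assert sum(joint_pmf.values()) == 1

# Example: the expected value of the product XY under this pmf.
e_product = expectation(lambda x, y: x * y, joint_pmf)
print(e_product)
```

Exact fractions are used here so that the result can be compared with a hand calculation without rounding error.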

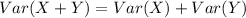

Taking f(X,Y)=X+Y gives:

![E[X+Y]=\sum_(x,y)(x+y)P(X=x,Y=y)\\=\sum_(x,y)xP(X=x,Y=y)+\sum_(x,y)yP(X=x,Y=y).](https://img.qammunity.org/2021/formulas/mathematics/high-school/ag31vxotuouabiubhrz6lli2logj70k6uj.png)

Let us look at the first of these sums.

![\sum_(x,y)xP(X=x,Y=y)\\=\sum_(x)x\sum_(y)P(X=x,Y=y)\\\text{Taking Marginal distribution of x}\\=\sum_(x)xP(X=x)=E[X].](https://img.qammunity.org/2021/formulas/mathematics/high-school/sy4xz4n17xprksdjdmr519qd4pusn05h0l.png)

Similarly,

![\sum_(x,y)yP(X=x,Y=y)\\=\sum_(y)y\sum_(x)P(X=x,Y=y)\\\text{Taking Marginal distribution of y}\\=\sum_(y)yP(Y=y)=E[Y].](https://img.qammunity.org/2021/formulas/mathematics/high-school/swqo0364evauj9x5lvg0t4y1khktxzgx3o.png)

Combining these two results gives:

![E[X+Y]=E[X]+E[Y] \text{ as required.}](https://img.qammunity.org/2021/formulas/mathematics/high-school/xfzj2n71glgv03f2rgo9xs7yoycuby5w3o.png)
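The argument in part (a) never uses independence, so the identity should hold even for dependent variables. The sketch below checks this numerically on a made-up joint pmf in which X and Y are clearly dependent; the distribution and the helper `marginal` are assumptions chosen for illustration only.

```python
from fractions import Fraction

# Hypothetical joint pmf of a dependent pair (X, Y):
# for instance P(X=4, Y=2) = 0, although P(X=4) and P(Y=2) are both positive.
joint_pmf = {
    (1, 2): Fraction(1, 2),
    (1, 5): Fraction(1, 6),
    (4, 5): Fraction(1, 3),
}

def marginal(pmf, index):
    """Sum the joint pmf over the other variable (index 0 for X, 1 for Y)."""
    out = {}
    for pair, p in pmf.items():
        out[pair[index]] = out.get(pair[index], 0) + p
    return out

p_x = marginal(joint_pmf, 0)  # P(X=x)
p_y = marginal(joint_pmf, 1)  # P(Y=y)

e_x = sum(x * p for x, p in p_x.items())
e_y = sum(y * p for y, p in p_y.items())
e_sum = sum((x + y) * p for (x, y), p in joint_pmf.items())

# Linearity of expectation: E[X+Y] equals E[X] + E[Y].
assert e_sum == e_x + e_y
```

The two marginal sums mirror the "taking the marginal distribution" step in the derivation above.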

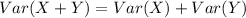

(b) We want to show that if X and Y are independent random variables, then:

$$\operatorname{Var}(X+Y)=\operatorname{Var}(X)+\operatorname{Var}(Y)$$

By definition of Variance, we have that:

$$
\begin{aligned}
\operatorname{Var}(X+Y) &= E\left[(X+Y-E[X+Y])^2\right] \\
&= E\left[(X-\mu_X+Y-\mu_Y)^2\right] \\
&= E\left[(X-\mu_X)^2+(Y-\mu_Y)^2+2(X-\mu_X)(Y-\mu_Y)\right] \\
&\quad \text{(since we have shown that expectation is linear)} \\
&= E\left[(X-\mu_X)^2\right]+E\left[(Y-\mu_Y)^2\right]+2E\left[(X-\mu_X)(Y-\mu_Y)\right] \\
&= E\left[(X-E[X])^2\right]+E\left[(Y-E[Y])^2\right]+2\operatorname{Cov}(X,Y)
\end{aligned}
$$

Here $\mu_X=E[X]$ and $\mu_Y=E[Y]$, so that $E[X+Y]=\mu_X+\mu_Y$ by part (a). The first two terms of the last line are $\operatorname{Var}(X)$ and $\operatorname{Var}(Y)$ by definition.

Since X and Y are independent, Cov(X,Y)=0
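To see why the covariance vanishes, recall that independence means P(X=x, Y=y) = P(X=x)P(Y=y) for every pair (x, y). A short worked derivation under that definition, writing $\mu_X=E[X]$ and $\mu_Y=E[Y]$:

$$
\begin{aligned}
\operatorname{Cov}(X,Y) &= E\left[(X-\mu_X)(Y-\mu_Y)\right] \\
&= \sum_{x,y}(x-\mu_X)(y-\mu_Y)\,P(X=x)\,P(Y=y) \\
&= \left(\sum_{x}(x-\mu_X)\,P(X=x)\right)\left(\sum_{y}(y-\mu_Y)\,P(Y=y)\right) \\
&= (E[X]-\mu_X)(E[Y]-\mu_Y) = 0
\end{aligned}
$$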

Therefore, as required:

$$\operatorname{Var}(X+Y)=\operatorname{Var}(X)+\operatorname{Var}(Y)$$
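As a numerical sanity check of Var(X+Y) = Var(X) + Var(Y) for independent variables, the sketch below builds a joint pmf as the product of two made-up marginals (which is exactly what independence means) and compares both sides. The marginals `p_x` and `p_y` and the helper `expectation` are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

# Hypothetical marginal pmfs of X and Y.
p_x = {0: Fraction(1, 2), 2: Fraction(1, 2)}
p_y = {1: Fraction(1, 3), 4: Fraction(2, 3)}

# Independence: the joint pmf is the product of the marginals.
joint_pmf = {(x, y): p_x[x] * p_y[y] for x, y in product(p_x, p_y)}

def expectation(f, pmf):
    """Return E[f(X, Y)] under the given joint pmf."""
    return sum(f(x, y) * p for (x, y), p in pmf.items())

mu_x = expectation(lambda x, y: x, joint_pmf)
mu_y = expectation(lambda x, y: y, joint_pmf)

var_x = expectation(lambda x, y: (x - mu_x) ** 2, joint_pmf)
var_y = expectation(lambda x, y: (y - mu_y) ** 2, joint_pmf)
cov_xy = expectation(lambda x, y: (x - mu_x) * (y - mu_y), joint_pmf)
var_sum = expectation(lambda x, y: (x + y - mu_x - mu_y) ** 2, joint_pmf)

# The covariance is zero, so the variance of the sum is the sum of variances.
assert cov_xy == 0
assert var_sum == var_x + var_y
```

If the joint pmf were replaced by one that is not a product of its marginals, `cov_xy` would in general be nonzero and the second assertion could fail, which is why the independence assumption matters in part (b).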