Answer: approximately 34 miles

Step-by-step explanation:

There are several ways to solve this using different assumptions.

First, picture an isosceles triangle with:

- two equal sides, each equal to the distance between the Earth and the Moon: 234,000 miles

- an included angle of 30 seconds of arc (30″)

- a base, opposite the 30″ angle, equal to x: the distance in miles by which the laser beam misses its assigned target

You can solve for x in several ways.
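For reference, here is a sketch of three equivalent routes, writing d = 234,000 mi for each equal side and θ = 30″ for the included angle (the symbols d and θ are introduced here only for illustration). Because θ is so small, all three give essentially the same result.

```latex
\begin{aligned}
\text{Law of cosines:}\quad & x^2 = d^2 + d^2 - 2d^2\cos\theta = 2d^2(1-\cos\theta) \\
\text{Bisect into two right triangles:}\quad & x = 2d\sin\frac{\theta}{2} \\
\text{Small-angle (arc-length) approximation:}\quad & x \approx d\,\theta \quad (\theta \text{ in radians})
\end{aligned}
```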

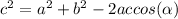

I will use the cosine rule:

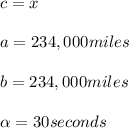
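Written out for this triangle (assuming the image above shows the standard form of the rule), both known sides are 234,000 miles and the included angle is 30″, so:

```latex
x^2 = 234000^2 + 234000^2 - 2\,(234000)(234000)\cos\left(30^{\prime\prime}\right)
```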

where the two equal sides are 234,000 miles each and the included angle is 30″.

One second of arc (1″) equals 1/3600 of a degree:

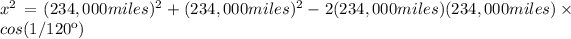
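Applied to the 30″ angle, the conversion works out as follows (the radian value is included because most calculators and programming libraries expect radians):

```latex
30^{\prime\prime} = \frac{30}{3600}^{\circ} = \frac{1}{120}^{\circ} \approx 0.008333^{\circ}
= \frac{\pi}{21600}\ \text{rad} \approx 1.4544 \times 10^{-4}\ \text{rad}
```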

Substitute these values into the equation and compute:
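If you want to check the arithmetic, here is a minimal Python sketch, assuming the values given above (234,000 miles and 30″). The function name `miss_distance` is hypothetical, chosen for illustration; it applies the law of cosines and cross-checks it against the half-angle form.

```python
import math

# Values from the problem statement
EARTH_MOON_MILES = 234_000.0  # each equal side of the isosceles triangle
ANGLE_ARCSEC = 30.0           # included angle, in seconds of arc

def miss_distance(side: float, angle_arcsec: float) -> float:
    """Base of an isosceles triangle via the law of cosines (hypothetical helper)."""
    angle_deg = angle_arcsec / 3600.0      # 1 arcsecond = 1/3600 degree
    angle_rad = math.radians(angle_deg)    # math.cos expects radians
    # x^2 = a^2 + a^2 - 2*a*a*cos(C) = 2*a^2*(1 - cos(C))
    return math.sqrt(2 * side**2 * (1 - math.cos(angle_rad)))

x = miss_distance(EARTH_MOON_MILES, ANGLE_ARCSEC)

# Cross-check: bisecting the triangle gives x = 2 * side * sin(C / 2)
x_half_angle = 2 * EARTH_MOON_MILES * math.sin(math.radians(ANGLE_ARCSEC / 3600) / 2)

print(f"x ≈ {x:.2f} miles")
print(f"half-angle check ≈ {x_half_angle:.2f} miles")
```

Both forms agree to well within a hundredth of a mile, so the beam lands roughly 34 miles from its target.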