The period of a satellite in a circular orbit around the Earth is given by

$$
T = 2\pi\sqrt{\frac{r^3}{GM}}
$$

Where G is the gravitational constant, M is the mass of the Earth, and r is the radius of the satellite's orbit (its distance from the centre of the Earth).
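As a quick numerical sketch, the formula can be evaluated directly. The values of G and the Earth's mass below are standard reference values assumed here (the original answer gives no numbers), and the 7,000 km orbital radius is purely illustrative.

```python
import math

# Standard reference values (assumed; not given in the original answer)
G = 6.674e-11        # gravitational constant, N·m²/kg²
M_EARTH = 5.972e24   # mass of the Earth, kg

def orbital_period(r: float) -> float:
    """Period T = 2*pi*sqrt(r^3 / (G*M)) of a circular orbit of radius r (metres)."""
    return 2 * math.pi * math.sqrt(r**3 / (G * M_EARTH))

# Illustrative orbital radius of 7,000 km, measured from the Earth's centre
T = orbital_period(7.0e6)
print(f"T = {T:.0f} s (about {T / 60:.0f} min)")
```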

Let us assume that the period of the satellite is decreased to half its value.

Then the new period is given by,

$$
\begin{aligned}
T_n &= \frac{T}{2} \\
&= \frac{2\pi}{2}\sqrt{\frac{r^3}{GM}}
\end{aligned}
$$

Moving the factor of 1/2 inside the square root, where it becomes 1/4, and simplifying,

$$
\begin{aligned}
T_n &= 2\pi\sqrt{\frac{r^3}{4GM}} \\
&= 2\pi\sqrt{\frac{\left(\frac{r}{\sqrt[3]{4}}\right)^3}{GM}} \\
&= 2\pi\sqrt{\frac{r_n^3}{GM}}
\end{aligned}
$$

Where r_n is the decreased radius of the orbit of the satellite.

The value of r_n is,

$$
\begin{aligned}
r_n &= \frac{r}{\sqrt[3]{4}} \\
&\approx 0.63\,r
\end{aligned}
$$
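A short check of this result, offered as a sketch: because T is proportional to r^(3/2), the ratio of the new period to the old one depends only on r_n / r, so G and M cancel and no physical constants are needed.

```python
# Since T = 2*pi*sqrt(r^3 / (G*M)), the ratio T_n / T depends only on r_n / r:
# T_n / T = (r_n / r) ** 1.5, so G and M cancel out.
factor = 1 / 4 ** (1 / 3)      # r_n / r from the derivation
period_ratio = factor ** 1.5   # resulting T_n / T, expected to be 1/2
print(f"r_n / r = {factor:.4f}, T_n / T = {period_ratio:.4f}")
```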

Thus, for the period of the satellite to be reduced to one-half of its initial value, its orbital radius must be reduced to 0.63 times its initial value.

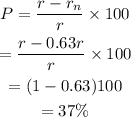

To calculate the percentage decrease of the radius,

Therefore, to reduce the orbital period of a satellite to one-half of its original value, its orbital radius must be decreased by about 37%.
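The same percentage can be computed for any target period. The helper name `radius_decrease_percent` below is hypothetical, introduced only for illustration; it uses the fact that T is proportional to r^(3/2), so r_new / r = (T_new / T)^(2/3).

```python
def radius_decrease_percent(period_factor: float) -> float:
    """Percent by which the orbital radius must shrink so that T_new = period_factor * T.

    Hypothetical helper: from T proportional to r**1.5, r_new / r = period_factor ** (2/3).
    """
    return (1 - period_factor ** (2 / 3)) * 100

# Halving the period (period_factor = 0.5) reproduces the result above
print(f"{radius_decrease_percent(0.5):.1f}%")
```

For a period factor of 0.5 this gives 0.5^(2/3) = 4^(-1/3), the same 0.63 ratio found in the derivation.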