Scientific notation (a number multiplied by 10ˣ) is a compact way to write large numbers. The exponent x tells you how many places the decimal point was moved so that only one digit is left in front of it.

The count always starts right after the first digit of the value. In 6.022 × 10²³, for example, the 23 places are counted from the point just after the 6.

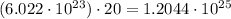
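To make the counting rule concrete, here is a minimal Python sketch that finds the leading value and the exponent of a number. It assumes the number is given as a decimal string and uses the standard-library `decimal` module so that no floating-point rounding creeps in; `to_scientific` is a hypothetical helper name, not an existing function.

```python
from decimal import Decimal


def to_scientific(value: str) -> tuple[Decimal, int]:
    """Split a nonzero number into (leading value, exponent), 1 <= |leading value| < 10.

    Hypothetical helper for illustration only.
    """
    d = Decimal(value)
    if d == 0:
        raise ValueError("zero has no normalized scientific form")
    # adjusted() is the exponent of the most significant digit, i.e. how many
    # places the decimal point must move to sit right after the first digit.
    exponent = d.adjusted()
    # scaleb(-exponent) divides by 10**exponent by shifting the decimal point.
    mantissa = d.scaleb(-exponent)
    return mantissa, exponent


mantissa, exponent = to_scientific("602200000000000000000000")
print(mantissa.normalize(), exponent)  # prints: 6.022 23
```

`normalize()` is only used to drop the trailing zeros for display; the value itself is unchanged.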

So first, multiply 6.022 × 20 and leave the power of ten aside for now.

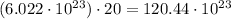
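The same first step as a small Python sketch: the value 6.022 × 10²³ is kept as two separate parts (the variable names `mantissa` and `exponent` are chosen here for illustration), and only the first part is multiplied. `Decimal` is used instead of `float` because binary floating point can add tiny rounding errors to values like 6.022.

```python
from decimal import Decimal

# 6.022 x 10^23, split into the number in front and the power of ten.
mantissa = Decimal("6.022")
exponent = 23

# Step 1: multiply only the number in front by 20; the exponent is left untouched.
product = mantissa * 20

# normalize() strips the trailing zero that Decimal keeps from the multiplication.
print(f"{product.normalize()} x 10^{exponent}")  # prints: 120.44 x 10^23
```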

Now you have to correct the notation. Remember that the decimal point must sit right after the first digit of the value.

For 120.44 × 10²³, you have to shift the decimal point 2 places to the left:

1.2044

Shifting the point two places to the left makes the number in front 10² times smaller, so to keep the value unchanged you add those two places to the exponent:

10²³⁺²=10²⁵
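The correction step can be sketched the same way, assuming the value is held as a `Decimal` in front plus an integer exponent. The hypothetical helper `normalize` moves the decimal point until one digit is left in front of it and adds the number of places moved to the exponent.

```python
from decimal import Decimal


def normalize(mantissa: Decimal, exponent: int) -> tuple[Decimal, int]:
    """Return (mantissa, exponent) rewritten so that 1 <= |mantissa| < 10.

    Hypothetical helper for illustration only.
    """
    if mantissa == 0:
        raise ValueError("zero cannot be normalized")
    # Number of places the point must move to the left (negative means right).
    shift = mantissa.adjusted()
    # Moving the point left by `shift` divides the number by 10**shift,
    # so the exponent grows by the same amount to keep the value unchanged.
    return mantissa.scaleb(-shift), exponent + shift


m, e = normalize(Decimal("120.44"), 23)
print(m, e)  # prints: 1.2044 25
```

For a number in front that is below 1, the shift comes out negative, so the same rule moves the point to the right and lowers the exponent instead.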

So the final result is 1.2044 × 10²⁵.