To solve this problem we use the coefficient of performance (COP) of a heat pump together with the Carnot cycle, which sets the maximum COP possible between two temperatures.

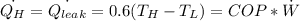

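According to the problem statement, the house is kept at 20 °C and the heat pump operates at 60% of the Carnot coefficient of performance, that is,

$$\text{COP} = \frac{Q}{W} = 0.6\,\frac{T_H}{T_H - T_C}$$

where

COP = coefficient of performance

W = work input to the pump

Q = heat delivered to the house

T_H = house temperature (20 °C ≈ 293 K)

T_C = outside temperature (in kelvin)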
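The pump's actual COP rises as the outside temperature T_C approaches the house temperature T_H. The lowest usable outside temperature is therefore the one at which 60% of the Carnot value just equals the required ratio Q/W. The following is a sketch of the rearrangement. It assumes that COP means heat delivered to the house per unit work and that all temperatures are in kelvin:

$$
\begin{aligned}
0.6\,\frac{T_H}{T_H - T_C} &= \frac{Q}{W} \\
T_H - T_C &= 0.6\,T_H\,\frac{W}{Q} \\
T_C &= T_H\left(1 - 0.6\,\frac{W}{Q}\right)
\end{aligned}
$$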

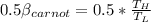

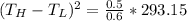

Therefore we have that

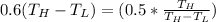

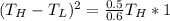

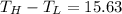

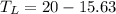

Substituting into the first equation and solving for the outside temperature, the minimum outside temperature for which the heat pump is a sufficient heat source is 4.4 °C.
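The same calculation can be sketched in Python. It assumes that the heating COP is Q/W and that the pump reaches 60% of the Carnot value. The function names `actual_cop` and `min_outside_temp_celsius` are illustrative, and the required Q/W must be taken from the problem's given data (shown in the images above), which is not reproduced here.

```python
"""Minimum outside temperature for a heat pump running at a fraction of Carnot COP.

Assumption: COP means heating COP, Q/W (heat delivered to the house per unit work).
"""

KELVIN_OFFSET = 273.15


def actual_cop(t_house_c: float, t_out_c: float, carnot_fraction: float = 0.6) -> float:
    """Heating COP of a pump that reaches `carnot_fraction` of the Carnot limit."""
    t_h = t_house_c + KELVIN_OFFSET  # house (hot reservoir) in kelvin
    t_c = t_out_c + KELVIN_OFFSET    # outside (cold reservoir) in kelvin
    return carnot_fraction * t_h / (t_h - t_c)


def min_outside_temp_celsius(required_cop: float, t_house_c: float = 20.0,
                             carnot_fraction: float = 0.6) -> float:
    """Lowest outside temperature (°C) at which actual_cop() still reaches required_cop.

    Solves carnot_fraction * T_H / (T_H - T_C) = required_cop for T_C.
    """
    if required_cop <= carnot_fraction:
        # T_C would be at or below absolute zero, so no physical answer exists.
        raise ValueError("required_cop must exceed carnot_fraction")
    t_h = t_house_c + KELVIN_OFFSET
    t_c = t_h * (1.0 - carnot_fraction / required_cop)
    return t_c - KELVIN_OFFSET


if __name__ == "__main__":
    # Hypothetical ratio Q/W = 10, not a value taken from the problem.
    t_min = min_outside_temp_celsius(10.0)
    print(f"minimum outside temperature: {t_min:.1f} °C")
```

For instance, a hypothetical required ratio Q/W = 10 gives `min_outside_temp_celsius(10.0)` ≈ 2.4 °C. Feeding that threshold back into `actual_cop` confirms that the pump just meets the required ratio there. A larger required ratio pushes the threshold closer to the house temperature.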