Step-by-step explanation:

It is given that,

Initial speed of the ball, u = 4.05 m/s

Angle of the roof below the horizontal, θ = 40°

Height of the edge above the ground, h = 4.9 m

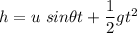

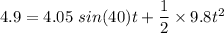

Let t be the time the baseball spends in the air. It can be found by applying the second equation of motion to the vertical motion, where the initial vertical velocity is the downward component of u:

Solving the above equation and taking the positive root, we get t ≈ 0.769 s
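
For reference, the equation being solved can be sketched as follows. This assumes downward is taken as positive, g = 9.8 m/s² (a value not stated in the problem), and θ = 40° for the roof angle:

$$
h = (u\sin\theta)\,t + \tfrac{1}{2}\,g\,t^{2}
\quad\Longrightarrow\quad
4.9 = (4.05\sin 40^\circ)\,t + 4.9\,t^{2}
$$

$$
t = \frac{-u\sin\theta + \sqrt{(u\sin\theta)^{2} + 2gh}}{g} \approx 0.769\ \text{s}
$$

The negative root is discarded because the time of flight must be positive.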

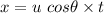

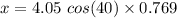

Let x be the horizontal distance from the roof edge to the point where the baseball lands on the ground. Since the horizontal velocity stays constant during the flight, it can be calculated as:

x = u cos(40°) × t = 4.05 × cos(40°) × 0.769 ≈ 2.39 m

Hence, this is the required solution.
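
As a quick numerical check, here is a minimal Python sketch of the same calculation. The function names `time_of_flight` and `horizontal_distance` are illustrative, and g = 9.8 m/s² is an assumed value, since the problem does not state it:

```python
import math


def time_of_flight(speed, angle_deg, height, g=9.8):
    """Time to fall `height` when launched `angle_deg` below the horizontal.

    Solves height = v_y * t + 0.5 * g * t**2 for the positive root,
    with downward taken as the positive direction.
    """
    v_y = speed * math.sin(math.radians(angle_deg))  # downward component
    return (-v_y + math.sqrt(v_y**2 + 2.0 * g * height)) / g


def horizontal_distance(speed, angle_deg, t):
    """Horizontal distance covered in time t at constant horizontal velocity."""
    v_x = speed * math.cos(math.radians(angle_deg))
    return v_x * t


t = time_of_flight(4.05, 40.0, 4.9)
x = horizontal_distance(4.05, 40.0, t)
print(f"t = {t:.3f} s, x = {x:.2f} m")
```

Changing the speed, angle, or height arguments lets the same sketch be reused for other versions of this problem.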