Explanation:

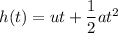

When a ball is tossed from an upper-storey window of a building, its height as a function of time is given by:

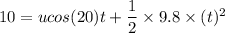

Here, u = 8 m/s

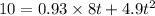

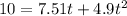

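The ball is launched at an angle of 20 degrees below the horizontal, so only the vertical component of the launch velocity, u sin 20° = 8 × 0.342 ≈ 2.74 m/s (directed downward), enters the height equation. We need to find the time taken by the ball to reach a point 10 meters below the level of launching, such that h(t) = 10 m and a = g = 9.8 m/s² (taking downward as positive):

10 = 2.74 t + 4.9 t²

After solving the above equation and keeping the positive root, t ≈ 1.18 seconds.

So, the ball will take about 1.18 seconds to reach a point 10 m below the level of launching. Hence, this is the required solution.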
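
As a quick numerical check, the fall time can be computed directly from the quadratic. This is only a minimal sketch: the function name `time_to_fall` and its parameters are illustrative, downward is taken as positive, air resistance is ignored, and the vertical component of the launch velocity is assumed to be u sin θ because the angle is measured from the horizontal. Under those assumptions it gives about 1.18 s.

```python
import math

def time_to_fall(drop_m, speed_ms, angle_below_deg, g=9.8):
    """Time (s) to descend drop_m metres, taking downward as positive."""
    # Downward component of the launch velocity (angle measured from the horizontal).
    v_down = speed_ms * math.sin(math.radians(angle_below_deg))
    # Solve 0.5*g*t**2 + v_down*t - drop_m = 0 and keep the positive root.
    disc = v_down**2 + 2.0 * g * drop_m
    return (-v_down + math.sqrt(disc)) / g

t = time_to_fall(10.0, 8.0, 20.0)
print(f"t = {t:.2f} s")  # t = 1.18 s
```

Substituting the result back into 0.5·g·t² + v_down·t should return the 10 m drop, which is an easy way to confirm that the correct root was kept.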