Answer:

0.00583 seconds

Step-by-step explanation:

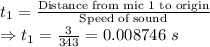

Distance from mic 1 to origin = 3 m

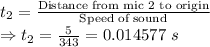

Distance from mic 2 to origin = 5 m

Speed of sound = 343 m/s

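Time taken by the sound to reach mic 1: t₁ = 3/343 = 0.008746 s

Time taken by the sound to reach mic 2: t₂ = 5/343 = 0.014577 s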

Time difference = t₂ - t₁ = 0.014577 - 0.008746 = 0.00583 s

∴ The difference in the time taken by the sound to reach the two microphones is 0.00583 s
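
The arithmetic can be double-checked with a short Python sketch. It assumes the sound travels in a straight line at a constant 343 m/s and uses the two distances stated above (3 m and 5 m); the names `travel_time`, `t1`, `t2`, and `time_difference` are illustrative only.

```python
SPEED_OF_SOUND = 343.0  # m/s, the value used in the answer


def travel_time(distance_m, speed=SPEED_OF_SOUND):
    """Return the time in seconds for sound to cover distance_m at constant speed."""
    return distance_m / speed


t1 = travel_time(3.0)  # mic 1 is 3 m from the origin
t2 = travel_time(5.0)  # mic 2 is 5 m from the origin
time_difference = t2 - t1  # mic 2 is farther away, so t2 > t1

print(f"t1 = {t1:.6f} s")
print(f"t2 = {t2:.6f} s")
print(f"time difference = {time_difference:.5f} s")
```

Because both times share the same speed, the difference also reduces to (5 - 3)/343 s, which is a quick way to confirm the result without computing t₁ and t₂ separately.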