Answer:

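a) $h = -25t + 500$, where $h$ is the height above ground in feet and $t$ is the time in seconds

b) 20 seconds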

Explanation:

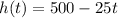

a)

Let $h$ = height above ground (in feet)

Let $t$ = time (in seconds)

Because the bird descends at a steady rate and drops from 500 feet to 250 feet in 10 seconds, it descends $\frac{500 - 250}{10} = 25$ feet per second.

∴ $h = -25t + 500$

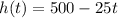
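
As a quick check of the model in part a), here is a minimal Python sketch. The function name `height` is hypothetical, and the 500-foot starting height is an assumption: it is not stated directly in this answer but follows from the 25 feet per second rate and the 20-second result in part b).

```python
def height(t, start=500.0, rate=25.0):
    """Height above ground (feet) after t seconds of steady descent.

    start is the assumed initial height in feet;
    rate is the descent speed in feet per second.
    """
    return start - rate * t


# Evaluate the model at the start, at 10 seconds, and at 20 seconds.
for t in (0, 10, 20):
    print(t, height(t))
```

At 10 seconds the sketch should give 250 feet, which matches the height used to find the rate.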

b)

To find the time it takes until the bird reaches the ground, the height $h$ must equal $0$:

$0 = -25t + 500$

$25t = 500$

$t = 20$

∴ It takes 20 seconds for the bird to reach the ground.
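
The same calculation can be sketched in Python. The helper name `time_to_ground` is hypothetical, and the inputs (a 500-foot starting height and a 25 feet per second descent) are the values assumed in this explanation.

```python
def time_to_ground(start, rate):
    """Seconds until a steady descent from `start` feet at `rate` ft/s reaches height 0.

    Solves 0 = start - rate * t for t.
    """
    if rate <= 0:
        raise ValueError("rate must be positive for the bird to descend")
    return start / rate


print(time_to_ground(500, 25))  # 20.0
```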