Answer:

The total distance he drove is 350 miles, with an average speed of 46.67 miles/hour.

Explanation:

Let

and

and

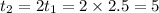

be the time of driving at a slower rate and at a faster rate respectively.

be the time of driving at a slower rate and at a faster rate respectively.

Let the total distance be $d$ miles.

The speed for the first 150 miles = 60 miles/hour.

So, $150 = 60 \times t_1$ [as distance = speed × time], where $t_1$ is the time for the first 150 miles, and hence $t_1 = \frac{150}{60} = 2.5$ hours.

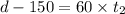

The remaining distance $= (d - 150)$ miles.

Speed for the remaining distance = 40 miles/hour.

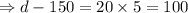

As the time he spent driving at the faster speed was twice the time he spent driving at the slower speed, the time of driving at the faster rate is $t_2 = 2 \times 2.5 = 5$ hours.

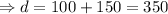

So, $d - 150 = 40 \times 5 = 200$ miles [as distance = speed × time], and therefore $d = 150 + 200 = 350$ miles.
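The steps up to the total distance can be checked with a minimal Python sketch. The function name `total_distance` and its parameters are illustrative, not part of the original problem, and the 40 miles/hour used for the second leg is the value implied by the 350-mile total (200 miles in 5 hours).

```python
def total_distance(first_leg_miles, first_leg_mph, second_leg_mph, time_ratio):
    """Return (t1, t2, d) for a two-leg trip.

    t1 is the time for the first leg, t2 = time_ratio * t1 is the time
    for the second leg, and d is the total distance in miles.
    """
    t1 = first_leg_miles / first_leg_mph      # time = distance / speed
    t2 = time_ratio * t1                      # second leg takes time_ratio times as long
    remaining = second_leg_mph * t2           # distance = speed x time
    return t1, t2, first_leg_miles + remaining


# Assumption: second-leg speed of 40 miles/hour, as implied by the stated total.
t1, t2, d = total_distance(150, 60, 40, 2)
print(t1, t2, d)  # 2.5 5.0 350.0
```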

The average speed of the journey = (Total distance)/(Total time taken)

= 350/(2.5 + 5)

= 350/7.5

≈ 46.67 miles/hour.

Hence, the total distance he drove is 350 miles, with an average speed of 46.67 miles/hour.
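As a quick check of the average-speed step, here is a small Python sketch. It assumes the two legs are 150 miles in 2.5 hours and 200 miles in 5 hours, as in the working above; the helper name `average_speed` is hypothetical. It also shows why simply averaging the two leg speeds would give a different figure: the average speed weights each leg by the time spent on it.

```python
def average_speed(legs):
    """Average speed over legs given as (distance_miles, time_hours) pairs."""
    total_distance = sum(distance for distance, _ in legs)
    total_time = sum(time for _, time in legs)
    return total_distance / total_time


legs = [(150, 2.5), (200, 5)]  # (miles, hours) for each leg

avg = average_speed(legs)
print(round(avg, 2))  # 46.67

# Plain mean of the two leg speeds, for comparison only.
naive = sum(distance / time for distance, time in legs) / len(legs)
print(naive)  # 50.0
```

The two results differ because more time was spent on the second leg, so its speed counts for more in the overall average.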