Given:

Initial velocity (directed downward), u = 12.5 m/s.

Height of the camera above the ground, h = 64.3 m.

Acceleration due to gravity, g = 9.8 m/s².

To Find:

The time the camera takes to reach the ground.

Solution:

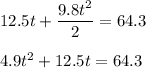

Taking downward as positive, by the second equation of motion:

h = ut + ½gt²

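Putting in all the given values, we get:

64.3 = 12.5t + ½ × 9.8 × t², i.e. 4.9t² + 12.5t − 64.3 = 0

Solving this quadratic:

t = [−12.5 ± √(12.5² + 4 × 4.9 × 64.3)] / (2 × 4.9) = (−12.5 ± √1416.53) / 9.8

t ≈ 2.56 s or t ≈ −5.116 s.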
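A minimal Python sketch that solves the same quadratic numerically, assuming downward is positive as in the working above. The variable names (u, h, g, t1, t2) are illustrative only.

```python
import math

# Given values (downward taken as positive)
u = 12.5   # initial downward velocity, m/s
h = 64.3   # height above the ground, m
g = 9.8    # acceleration due to gravity, m/s^2

# h = u*t + (1/2)*g*t^2 rearranged into a*t^2 + b*t + c = 0
a = 0.5 * g
b = u
c = -h

disc = b * b - 4 * a * c      # discriminant b^2 - 4ac
root = math.sqrt(disc)
t1 = (-b + root) / (2 * a)    # positive root: the physical answer
t2 = (-b - root) / (2 * a)    # negative root: rejected, time cannot be negative

print(f"t1 = {t1:.2f} s, t2 = {t2:.3f} s")
```

The unrounded positive root is about 2.565 s, which rounds to 2.56 s at two decimal places.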

Since time cannot be negative, the negative root is rejected:

t = 2.56 s.
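As a sanity check, one can substitute the accepted root back into h = ut + ½gt². This is a small Python sketch under the same assumptions (downward positive, g = 9.8 m/s²); the helper name `displacement` is hypothetical.

```python
import math

u = 12.5   # initial downward velocity, m/s
h = 64.3   # height above the ground, m
g = 9.8    # acceleration due to gravity, m/s^2

def displacement(t: float) -> float:
    """Distance fallen after t seconds: s = u*t + (1/2)*g*t^2."""
    return u * t + 0.5 * g * t ** 2

# Positive root of (1/2)*g*t^2 + u*t - h = 0, i.e. t = (-u + sqrt(u^2 + 2gh)) / g
t_exact = (-u + math.sqrt(u ** 2 + 2 * g * h)) / g

print(f"exact root {t_exact:.4f} s -> fallen {displacement(t_exact):.2f} m")
print(f"rounded 2.56 s -> fallen {displacement(2.56):.2f} m")
```

The exact root reproduces 64.3 m, while the rounded 2.56 s gives about 64.11 m; the 0.19 m gap comes only from rounding the time.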

Therefore, the camera takes about 2.56 s to reach the ground.
