Answer:

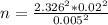

The sample size is

Explanation:

From the question we are told that

The standard deviation is

The precision is

The confidence level is 98%

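Generally the sample size is mathematically represented as

$$n = \left[\frac{Z_{\alpha/2} \cdot \sigma}{E}\right]^2$$

Where $\sigma$ is the standard deviation, $E$ is the precision, and $\alpha$ is the level of significance, which is mathematically evaluated as

$$\alpha = (100 - 98)\% = 2\%$$

$$\alpha = 0.02$$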

and

$Z_{\alpha/2}$ is the critical value of $\frac{\alpha}{2} = 0.01$, which is obtained from the normal distribution table as 2.326

substituting values
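
The same calculation can be sketched in Python as a quick check. This is only an illustration: the function name `sample_size` and the inputs `sigma=10.0` and `precision=2.0` are hypothetical placeholders, not the values from the question, and the result is rounded up because a sample size must be a whole number.

```python
import math
from statistics import NormalDist


def sample_size(sigma, precision, confidence):
    """Sample size needed to estimate a mean to within +/- precision."""
    alpha = 1.0 - confidence                      # level of significance
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # critical value Z_{alpha/2}
    return math.ceil((z * sigma / precision) ** 2)


# Critical value for a 98% confidence level (alpha = 0.02, alpha/2 = 0.01).
z_crit = NormalDist().inv_cdf(1.0 - 0.02 / 2.0)
print(round(z_crit, 3))  # 2.326, matching the normal distribution table

# Hypothetical inputs for illustration only (not the question's values).
n_example = sample_size(sigma=10.0, precision=2.0, confidence=0.98)
print(n_example)
```

Replacing the placeholder `sigma` and `precision` with the standard deviation and precision given in the question reproduces the substitution step.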