Answer:

Explanation:

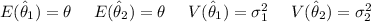

Given that:

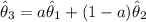

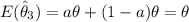

If we are to consider the estimator

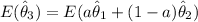

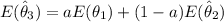

a) For this estimator to be unbiased, we need:

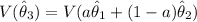

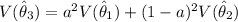

b) If

are independent


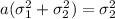

Thus, to minimize the variance of this estimator, the constant a can be determined as follows:

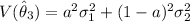

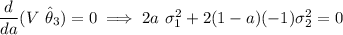

Using differentiation:

⇒

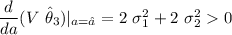

This implies that

So,

is minimum when


As such,

if
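For reference, the differentiation step can be sketched in general form. This is only an illustration under an assumed setup, since the original expressions are available only as images: it supposes the estimator is a weighted combination $a\hat{\theta}_1 + (1-a)\hat{\theta}_2$ of two independent unbiased estimators with variances $\sigma_1^2$ and $\sigma_2^2$. The symbols $\hat{\theta}_1$, $\hat{\theta}_2$, $\sigma_1^2$, $\sigma_2^2$ and $V(a)$ are assumptions introduced here and may not match the notation in the images.

```latex
% Assumed setup (not confirmed by the source):
% \hat{\theta}_1, \hat{\theta}_2 independent and unbiased,
% with Var(\hat{\theta}_1) = \sigma_1^2 and Var(\hat{\theta}_2) = \sigma_2^2.
\begin{align*}
V(a) &= \operatorname{Var}\!\left(a\hat{\theta}_1 + (1-a)\hat{\theta}_2\right)
      = a^2\sigma_1^2 + (1-a)^2\sigma_2^2 \\
\frac{dV}{da} &= 2a\sigma_1^2 - 2(1-a)\sigma_2^2 = 0
\quad\Longrightarrow\quad
a = \frac{\sigma_2^2}{\sigma_1^2 + \sigma_2^2} \\
\frac{d^2V}{da^2} &= 2\sigma_1^2 + 2\sigma_2^2 > 0
\end{align*}
```

Under these assumptions the positive second derivative confirms a minimum, and when the two variances are equal the weight reduces to $a = 1/2$.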