Answer:

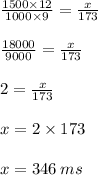

The algorithm takes 346 ms to run on an input array with 1500 rows and 4096 columns.

Step-by-step explanation:

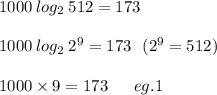

For an input array with 1000 rows and 512 columns, the algorithm takes 173 ms.

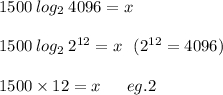

We want to find out how long the algorithm will take to run on an input array with 1500 rows and 4096 columns.

Let x be the time in ms that the algorithm takes to run on an input with 1500 rows and 4096 columns.

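For an input of n rows and m columns, it takes time proportional to $n \log_2 m$ (the growth rate consistent with the two timings in this answer), so $T(n, m) = c \, n \log_2 m$ for some constant $c$.

So,

$$173 = c \cdot 1000 \cdot \log_2 512 = 9000\,c \qquad \text{(eq. 1)}$$

and

$$x = c \cdot 1500 \cdot \log_2 4096 = 18000\,c \qquad \text{(eq. 2)}$$

Now divide eq. 2 by eq. 1 to find the value of x:

$$\frac{x}{173} = \frac{18000\,c}{9000\,c} = 2 \quad\Longrightarrow\quad x = 2 \cdot 173 = 346$$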

Therefore, the algorithm takes 346 ms to run on an input array with 1500 rows and 4096 columns.
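
As a quick sanity check, the scaling can be sketched in Python. This is a minimal sketch that assumes the running time is proportional to n · log2(m), which is the growth rate consistent with the two timings given. The helper name `scaled_time` is made up for illustration.

```python
import math

def scaled_time(t_known, n_known, m_known, n_new, m_new):
    """Scale a known timing to a new input size.

    Assumption: running time is proportional to n * log2(m),
    where n is the number of rows and m the number of columns.
    """
    work_known = n_known * math.log2(m_known)  # 1000 * 9  = 9000
    work_new = n_new * math.log2(m_new)        # 1500 * 12 = 18000
    return t_known * work_new / work_known

# Known: 1000 rows x 512 columns takes 173 ms.
x = scaled_time(173, 1000, 512, 1500, 4096)
print(x)  # 346.0
```

The constant of proportionality cancels in the ratio, so it never has to be computed.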