Answer:

0.118 seconds

Explanation:

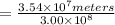

The following data are given in the question:

Speed of the radio signal = 3.00 × 10^8 meters per second

Distance = 3.54 × 10^7 meters

Based on the given information, the number of seconds required for the radio signal to travel from the satellite to the surface of the Earth is calculated as follows:

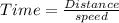

As we know that

Time = Distance ÷ Speed

So,

Time = (3.54 × 10^7 meters) ÷ (3.00 × 10^8 meters per second)

= 0.118 seconds

We simply applied the general formula, time = distance ÷ speed, to determine the number of seconds taken.
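
As a quick check of the arithmetic, here is a minimal Python sketch. The function name `signal_travel_time` and its parameter names are illustrative and not part of the original question; the two input values are the ones given above.

```python
def signal_travel_time(distance_m: float, speed_m_per_s: float) -> float:
    """Return the travel time in seconds: time = distance / speed."""
    return distance_m / speed_m_per_s


# Values given in the question
distance_m = 3.54e7      # satellite-to-surface distance, in meters
speed_m_per_s = 3.00e8   # speed of the radio signal, in meters per second

time_s = signal_travel_time(distance_m, speed_m_per_s)
print(f"{time_s:.3f} seconds")  # prints "0.118 seconds"
```

Rounding to three decimal places matches the three significant figures of the given data.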