Answer:

5 minutes

Explanation:

To find the number of minutes it takes Jason to run a mile, we first have to find the rate at which he runs.

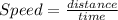

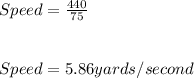

This rate is Jason's speed, given as:

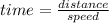

From the formula of speed, time is given as:

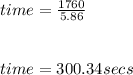
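The relation time = distance / speed can be sketched in Python as below. This is a minimal illustration, not the original working. The helper name `time_to_cover` and the speed of 5.5 yards per second in the usage line are hypothetical placeholders, because Jason's actual rate from the problem is not reproduced in the text.

```python
YARDS_PER_MILE = 1760  # 1 mile = 1760 yards


def time_to_cover(distance_yards: float, speed_yards_per_second: float) -> float:
    """Return the time in seconds needed to cover distance_yards at a constant speed."""
    if speed_yards_per_second <= 0:
        raise ValueError("speed must be positive")
    # time = distance / speed
    return distance_yards / speed_yards_per_second


# Hypothetical speed, for illustration only: 5.5 yards per second
print(time_to_cover(YARDS_PER_MILE, 5.5))  # prints 320.0
```

Substituting the actual rate from the problem in place of 5.5 gives the time for one mile in seconds.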

Time taken for Jason to run 1 mile (1760 yards) will therefore be approximately 300.34 seconds.

Converting this time to minutes, using 60 seconds = 1 minute:

300.34 ÷ 60 ≈ 5.01 minutes
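The seconds-to-minutes conversion can be checked with a short Python sketch, using the 300.34-second running time from the working above. The function name `seconds_to_minutes` is illustrative only.

```python
SECONDS_PER_MINUTE = 60  # 60 seconds = 1 minute


def seconds_to_minutes(seconds: float) -> float:
    """Convert a duration in seconds to minutes."""
    return seconds / SECONDS_PER_MINUTE


minutes = seconds_to_minutes(300.34)
print(round(minutes, 2))  # prints 5.01
```

Rounded to the nearest whole minute, this gives 5 minutes.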

It therefore takes Jason about 5 minutes to run 1 mile.