Answer:

h=1 df/dx=-15

h=0.1 df/dx=-10.5

h=0.01 df/dx=-10.05

h=0.001 df/dx=-10.005

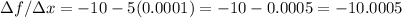

h=0.0001 df/dx=-10.0005

Explanation:

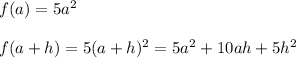

The function should be f(x) = 5x^2.

If the function were linear, f(x) = 5x, the answer would be very simple: the rate of change would be 5 for every value of h.

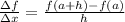

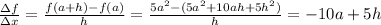

The rate of change of f at x = a over a step of size h can be defined as:

$$
\frac{\Delta f}{\Delta x} = \frac{f(a+h) - f(a)}{h}
$$

For this function, f(x) = 5x^2, we have:

Then, we have:

The value for a is a=1
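
The equations for these steps are not reproduced in the text, so the following is only a reconstruction. It assumes f(x) = 5x^2 and the forward difference quotient (f(a+h) - f(a))/h:

$$
\frac{f(a+h) - f(a)}{h} = \frac{5(a+h)^2 - 5a^2}{h} = \frac{10ah + 5h^2}{h} = 10a + 5h
$$

With a = 1 this gives 10 + 5h, which matches the magnitude of each value in the answer (15, 10.5, 10.05, ...) and tends to 10 as h shrinks. The negative sign on the listed values would follow if the function were -5x^2 or if the difference were taken in the opposite order; the text does not say which.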

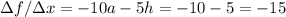

For h=1

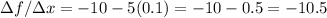

For h=0.1

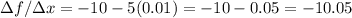

For h=0.01

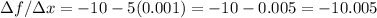

For h=0.001

For h=0.0001
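
The five cases can also be checked numerically. This is a minimal sketch, not the original author's method: it assumes f(x) = 5x^2, a = 1, and a forward difference, and the names `f`, `difference_quotient`, and `results` are illustrative only. It uses exact fractions so that rounding error does not hide the pattern.

```python
from fractions import Fraction


def f(x):
    # Assumed function from the explanation: f(x) = 5x^2
    return 5 * x ** 2


def difference_quotient(func, a, h):
    # Forward difference quotient: (f(a + h) - f(a)) / h
    return (func(a + h) - func(a)) / h


a = Fraction(1)  # point of evaluation, a = 1 as stated above
steps = ["1", "0.1", "0.01", "0.001", "0.0001"]

# Exact rational result for each step size h
results = {s: difference_quotient(f, a, Fraction(s)) for s in steps}

for s, q in results.items():
    print(f"h={s}  rate={float(q)}")
```

Under these assumptions each result equals 10 + 5h exactly, so the values approach 10 as h decreases. They agree in magnitude with the values listed in the answer; the sign there depends on a convention the text does not state.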