COMPLETE QUESTION:

When the magnetic flux through a single loop of wire increases by 30 T·m², an average current of 40 A is induced in the wire. Assuming that the wire has a resistance of 2.5 Ω, (a) over what period of time did the flux increase? (b) If the current had been only 20 A, how long would the flux increase have taken?

Answer:

(a). The time period is 0.3 s.

(b). The time period is 0.6 s.

Step-by-step explanation:

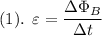

Faraday's law says that for one loop of wire the emf

is

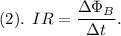

is

and since from Ohm's law

,

,

then equation (1) becomes

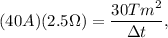
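Because both parts of the question ask for a time, it may help to rearrange the relation between current, resistance, and flux change once before substituting numbers. The following is a sketch of that rearrangement, using the symbols $I$ (average induced current), $R$ (resistance), $\Delta \Phi$ (flux change), and $\Delta t$ (elapsed time), together with a unit check that relies on $1\ \mathrm{T\,m^2} = 1\ \mathrm{V\,s}$ and $1\ \mathrm{A} \cdot 1\ \Omega = 1\ \mathrm{V}$:

$$IR = \frac{\Delta \Phi}{\Delta t} \quad\Longrightarrow\quad \Delta t = \frac{\Delta \Phi}{IR}, \qquad \frac{[\mathrm{T\,m^2}]}{[\mathrm{A}][\Omega]} = \frac{\mathrm{V\,s}}{\mathrm{V}} = \mathrm{s}.$$

For a fixed flux change and resistance, the time is inversely proportional to the current, so halving the current doubles the time.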

(a).

We are told that the change in magnetic flux is

, the current induced is

, the current induced is

, and the resistance of the wire is

, and the resistance of the wire is

; therefore, equation (2) gives

; therefore, equation (2) gives

which we solve for

to get:

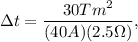

to get:

,

,

which is the period of time over which the magnetic flux increased.

(b).

Now, if the current had been $I = 20\ \mathrm{A}$, then equation (2) would give

$$\Delta t = \frac{30\ \mathrm{T\,m^2}}{(20\ \mathrm{A})(2.5\ \Omega)} = 0.6\ \mathrm{s},$$

which is a longer time interval than what we got in part (a). This is understandable because in part (a) the rate of change of flux $\Delta \Phi / \Delta t$ is greater than in part (b), and therefore the current in (a) is greater than in (b).
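As a quick numerical check of both parts, here is a minimal Python sketch. The function name `flux_change_duration` and its parameter names are hypothetical, chosen only for illustration; the function simply evaluates the time as the flux change divided by the product of current and resistance, in SI units.

```python
def flux_change_duration(delta_flux, current, resistance):
    """Return the time in seconds for a flux change delta_flux (T*m^2)
    that induces an average current (A) in a single loop of the given
    resistance (ohms), using delta_t = delta_flux / (current * resistance)."""
    if current <= 0 or resistance <= 0:
        raise ValueError("current and resistance must be positive")
    return delta_flux / (current * resistance)


# Values taken from the question (hypothetical variable names).
t_a = flux_change_duration(delta_flux=30.0, current=40.0, resistance=2.5)
t_b = flux_change_duration(delta_flux=30.0, current=20.0, resistance=2.5)

print(f"(a) {t_a:.1f} s")  # (a) 0.3 s
print(f"(b) {t_b:.1f} s")  # (b) 0.6 s
```

Halving the current doubles the result, which matches the comparison between parts (a) and (b).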