Answer:

85.2 feet

Explanation:

Let the distance be 'd' and time be 't'.

Given:

Distance varies directly as the square of the time the object is in motion.

So,

$$d = k t^2$$

where 'k' is the constant of proportionality.

Now, also given:

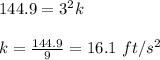

When d = 144.9 ft, t = 3 s

Substitute these values into the equation $d = k t^2$ and solve for 'k'. This gives $k = 144.9 / 3^2 = 16.1$ ft/s²:

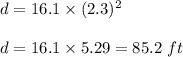

Now, we need to find 'd' when time 't' equals 2.3 s.

So, substitute t = 2.3 s and the value of 'k' into the equation to get 'd'. This gives,

$$d = \frac{144.9}{3^2} \times (2.3)^2 = 16.1 \times 5.29 \approx 85.2 \text{ ft}$$

The distance the object travels to reach the base equals the height of the cliff. So, the height of the cliff is about 85.2 ft.
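As a quick check of the arithmetic, here is a minimal Python sketch of the same two steps: solve for the constant from the known pair, then evaluate the distance at the new time. The function names `variation_constant` and `distance_at` are hypothetical, chosen only for this illustration.

```python
def variation_constant(d_known, t_known):
    """Solve d = k * t^2 for k, given one known (d, t) pair."""
    return d_known / t_known ** 2


def distance_at(k, t):
    """Evaluate d = k * t^2 for a given constant k and time t."""
    return k * t ** 2


# Known pair from the problem: 144.9 ft after 3 s.
k = variation_constant(144.9, 3.0)

# Time taken to reach the base of the cliff: 2.3 s.
height = distance_at(k, 2.3)

print(f"k = {k:.1f} ft/s^2")        # k = 16.1 ft/s^2
print(f"height = {height:.1f} ft")  # height = 85.2 ft
```

The unrounded result is about 85.169 ft, which rounds to the 85.2 ft given in the answer.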