Answer:

linear: 5 s

quadratic: 50 s

log-linear: 7.5 s

cubic: 500 s

Explanation:

Let $T_1$ and $T_2$ be the running times associated with inputs of sizes $n_1$ and $n_2$, respectively.

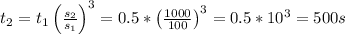
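One way to check the four cases is to assume the running time has the form T(n) = c·f(n), so that the constant c cancels when two input sizes are compared. The sketch below applies that ratio. The helper `scaled_time` and the inputs (0.5 s at size 100, scaled to size 1000) are assumptions inferred from the answers listed at the top, not values given in the surviving text.

```python
import math


def scaled_time(t1, n1, n2, growth):
    """Estimate T(n2) from a measured T(n1) = t1, assuming T(n) = c * growth(n)."""
    return t1 * growth(n2) / growth(n1)


# Hypothetical inputs: inferred from the stated answers, not given in the text.
T1, N1, N2 = 0.5, 100, 1000

# Growth functions for the four cases discussed in the explanation.
growth_rates = {
    "linear": lambda n: n,
    "log-linear": lambda n: n * math.log(n),  # the log base cancels in the ratio
    "quadratic": lambda n: n ** 2,
    "cubic": lambda n: n ** 3,
}

estimates = {name: scaled_time(T1, N1, N2, f) for name, f in growth_rates.items()}

for name, seconds in estimates.items():
    print(f"{name}: {seconds:.2f} s")
```

With these assumed inputs the script prints 5.00 s for linear, 7.50 s for log-linear, 50.00 s for quadratic and 500.00 s for cubic. The log-linear figure depends on the actual sizes and not only on their ratio, so it changes if the sizes differ from the assumed 100 and 1000.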

If the running time is linear:

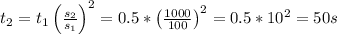

If the running time is quadratic:

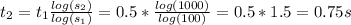

If the running time is log-linear:

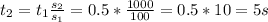

If the running time is cubic:
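The cubic case follows the same ratio argument as the others. As a sketch, write $T_1$ for the measured time at the smaller input size $n_1$ and $T_2$ for the time at the larger size $n_2$. The values $T_1 = 0.5$ s and $n_2 / n_1 = 10$ are assumptions inferred from the linear and quadratic answers listed at the top, not stated in the surviving text:

$$
T_2 = T_1 \left(\frac{n_2}{n_1}\right)^{3} = 0.5\ \text{s} \times 10^{3} = 500\ \text{s}
$$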