Answer:

Approximately

points.

Explanation:

Let random variables $X_1, X_2, \dots, X_n$ denote the scores of those $n$ test-takers. These random variables are independent and identically distributed (i.i.d.): the scores follow the same distribution and are independent of one another.

While the exact distribution of each score is unknown, its mean and variance are given:

$$E(X_i) = \mu \quad \text{and} \quad \operatorname{Var}(X_i) = \sigma^2.$$

By the Central Limit Theorem, the mean (average) $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ of a sufficiently large number of such observations approximately follows a normal distribution. The mean of that distribution is $E(\bar{X}) = \mu$ (the same as the mean of the individual observations), while its variance is

$$\operatorname{Var}(\bar{X}) = \frac{\sigma^2}{n}.$$
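To make the Central Limit Theorem claim concrete, here is a minimal simulation sketch. The original problem's numbers are not available here, so it assumes a hypothetical, deliberately non-normal score distribution, Uniform(40, 100), whose per-score mean is $\mu = 70$ and variance is $\sigma^2 = (100 - 40)^2 / 12 = 300$, and a hypothetical sample size of $n = 50$. It draws many samples of $n$ scores and checks that the sample averages have mean close to $\mu$ and variance close to $\sigma^2 / n = 6$.

```python
import random
import statistics

# Hypothetical setup (NOT the original problem's values): each score is drawn
# from Uniform(40, 100), which has mean (40 + 100) / 2 = 70
# and variance (100 - 40)**2 / 12 = 300.
LOW, HIGH = 40.0, 100.0
MU = (LOW + HIGH) / 2
SIGMA2 = (HIGH - LOW) ** 2 / 12
N = 50            # hypothetical number of test-takers per sample
TRIALS = 20_000   # number of simulated samples


def simulate_sample_means(n, trials, seed=0):
    """Return `trials` sample means, each the average of n i.i.d. uniform scores."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return [
        statistics.fmean(rng.uniform(LOW, HIGH) for _ in range(n))
        for _ in range(trials)
    ]


means = simulate_sample_means(N, TRIALS)
print(f"empirical mean of X-bar:     {statistics.fmean(means):.3f}  (theory: {MU})")
print(f"empirical variance of X-bar: {statistics.pvariance(means):.3f}  (theory: {SIGMA2 / N})")
```

Uniform scores were chosen only because their mean and variance have simple closed forms. Any distribution with finite variance would show the same behavior for large enough $n$.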

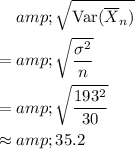

Take the square root of the variance to find the corresponding standard deviation:

$$\sigma_{\bar{X}} = \sqrt{\frac{\sigma^2}{n}} = \frac{\sigma}{\sqrt{n}}.$$
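The last step is a single formula, $\sqrt{\sigma^2 / n}$, shown here as a small helper. The function name `sd_of_sample_mean` and the example inputs (per-score variance 300, $n = 50$) are hypothetical illustrations, not the values from the original problem.

```python
import math


def sd_of_sample_mean(variance, n):
    """Standard deviation of the mean of n i.i.d. observations: sqrt(variance / n)."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    return math.sqrt(variance / n)


# Hypothetical inputs: per-score variance 300, sample size 50.
print(round(sd_of_sample_mean(300, 50), 3))  # prints 2.449
```

Substituting the problem's actual variance and number of test-takers into the same function gives the standard deviation of the average score.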