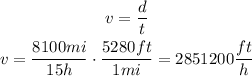

We can calculate the average speed as the distance traveled divided by the time it took to cover that distance.

In this case, the distance is 8100 miles and the time is 15 hours.

We have to express the speed in feet per hour.

We will use the equivalence 1 mile = 5280 feet. To convert the units, we multiply the result by (5280 ft / 1 mile). This factor has a value of 1, so it lets us change the units without changing the quantity.

Then, we can calculate the average speed as:
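Written out with the values stated above, the computation can be sketched as follows. The symbols $v$, $d$, and $t$ are labels introduced here for the average speed, the distance, and the time; they do not appear in the original problem.

$$
v = \frac{d}{t}
  = \frac{8100\ \text{mi}}{15\ \text{h}} \cdot \frac{5280\ \text{ft}}{1\ \text{mi}}
  = 540\ \frac{\text{mi}}{\text{h}} \cdot 5280\ \frac{\text{ft}}{\text{mi}}
  = 2{,}851{,}200\ \frac{\text{ft}}{\text{h}}
$$

The miles cancel between the numerator of the first fraction and the denominator of the conversion factor, leaving feet per hour.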

Answer: the average speed was 2,851,200 feet per hour.