Given:

Distance covered, d = 100 yards

Time, t = 9.0 seconds

Let's find the average speed in miles per hour.

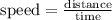

To find the average speed, apply the formula:

average speed = distance / time

Because the answer must be in miles per hour, convert the distance from yards to miles and the time from seconds to hours.

We have:

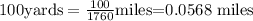

• Distance.

Where:

1 mile = 1760 yards

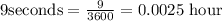

• Time.

Where:

1 hour = 3600 seconds

The distance in miles is 100 / 1760 ≈ 0.0568 miles.

The time in hours is 9.0 / 3600 = 0.0025 hours.

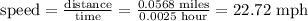

Hence, to find the average speed we have:

average speed = 0.0568 miles / 0.0025 hours = 22.72 miles per hour

Therefore, the average speed is 22.72 miles per hour.
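As a quick check, the same conversion can be sketched in Python. The function name `average_speed_mph` and the constant names are illustrative choices, not part of the original solution. Because this version does not round the intermediate distance, it gives about 22.73 mph, slightly above the 22.72 mph obtained from the rounded value 0.0568 miles.

```python
# Conversion factors used in the solution above.
YARDS_PER_MILE = 1760
SECONDS_PER_HOUR = 3600


def average_speed_mph(distance_yards: float, time_seconds: float) -> float:
    """Return the average speed in miles per hour (hypothetical helper)."""
    distance_miles = distance_yards / YARDS_PER_MILE   # yards -> miles
    time_hours = time_seconds / SECONDS_PER_HOUR       # seconds -> hours
    return distance_miles / time_hours


speed = average_speed_mph(100, 9.0)
print(f"{speed:.2f} mph")
```

Keeping full precision until the final step avoids the small rounding difference between the two results.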

ANSWER:

22.72 mph