Answer: It would take about 0.46 seconds for the ball to travel from the pitcher's mound to home plate.

Explanation:

We are given that

Speed at which the pitcher throws the ball = 90 miles per hour

Distance covered = 60 feet 6 inches

We know that

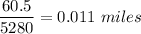

1 mile = 5280 feet

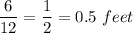

and 1 foot = 12 inches

6 inches = $\frac{6}{12}$ feet = 0.5 feet

So, the total distance in feet is 60 feet + 0.5 feet = 60.5 feet

Now,

1 foot = $\frac{1}{5280}$ mile

So, 60.5 feet = $\frac{60.5}{5280}$ mile ≈ 0.01146 mile

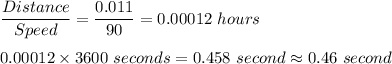

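So, the time taken by the pitch to travel from the pitcher's mound to home plate is given by

$$\text{Time} = \frac{\text{Distance}}{\text{Speed}} = \frac{60.5/5280 \text{ mile}}{90 \text{ miles per hour}} \approx 0.0001273 \text{ hour} = 0.0001273 \times 3600 \text{ seconds} \approx 0.46 \text{ seconds}$$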

Hence, it would take about 0.46 seconds for the ball to travel from the pitcher's mound to home plate.
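To double-check the arithmetic, here is a minimal Python sketch of the same calculation. The function name `pitch_travel_time` and the constant names are only illustrative, and the sketch converts the speed to feet per second rather than converting the distance to miles; both routes should give the same time.

```python
# Conversion factors used in the explanation above.
FEET_PER_MILE = 5280
INCHES_PER_FOOT = 12
SECONDS_PER_HOUR = 3600


def pitch_travel_time(speed_mph, feet, inches=0.0):
    """Return the travel time in seconds (illustrative helper)."""
    # Express the whole distance in feet, e.g. 60 ft 6 in -> 60.5 ft.
    distance_ft = feet + inches / INCHES_PER_FOOT
    # Convert miles per hour to feet per second, e.g. 90 mph -> 132 ft/s.
    speed_ft_per_s = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    # time = distance / speed
    return distance_ft / speed_ft_per_s


time_s = pitch_travel_time(90, 60, 6)
print(round(time_s, 2))  # 0.46
```

Working in feet per second keeps the intermediate numbers easy to check by hand: 60.5 feet divided by 132 feet per second is about 0.458 seconds, which rounds to 0.46.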