It can't be that

makes the series converge, because this would introduce a zero in the denominator when

. For a similar reason,

would involve an indeterminate term of

.

That leaves checking what happens when

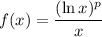

. First, consider the function

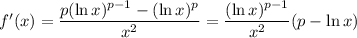

and its derivative

has critical points at

and

. (These never coincide because we're assuming

, so it's always the case that

.) Between these two points, say at

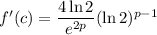

, you have

, which is positive regardless of the value of

. This means

is increasing on the interval

.

Meanwhile, if

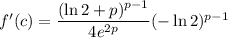

(and let's take

as an example), we have

, which is negative for all

. This means

is decreasing for all

, which shows that

is a decreasing sequence for all

, where

is some sufficiently large number that depends on

.
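To make the "decreasing past some threshold that depends on the parameter" step concrete, here is a small numerical sketch. It does not use the function from the question, whose formula isn't reproduced here. Instead it assumes a hypothetical stand-in term a(x) = ln(x)/x^p with p > 0, for which a'(x) = x^(-p-1)(1 - p ln x) < 0 once x > e^(1/p), so the threshold visibly depends on p. The helper `first_decreasing_index` is also hypothetical: it walks backwards from `n_max` to find where the tail of the sequence starts decreasing. A check like this only illustrates the calculus argument; it doesn't replace it.

```python
import math


def a(x: float, p: float) -> float:
    # Hypothetical stand-in term ln(x) / x**p (NOT the term from the question).
    return math.log(x) / x ** p


def first_decreasing_index(p: float, n_max: int = 10_000) -> int:
    """Return the smallest n with a(k, p) > a(k + 1, p) for every k in [n, n_max - 1).

    Only meaningful if n_max is well past the threshold e**(1/p).
    """
    n = n_max - 1
    # Walk backwards while the step from n - 1 to n is still a strict decrease.
    while n > 1 and a(n - 1, p) > a(n, p):
        n -= 1
    return n


for p in (1.0, 0.5, 0.25):
    # Compare the numerical threshold with the analytic one, e**(1/p).
    print(p, first_decreasing_index(p), math.exp(1 / p))
```

For p = 1 and p = 0.5 this returns 3 and 7, just below e^(1/p) ≈ 2.72 and 7.39, since a(n) > a(n + 1) can already hold when the maximum of a lies between n and n + 1.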

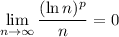

Now, it's also the case that for

(and in fact all

),

So you have an alternating series whose terms decrease in absolute value and converge to 0. The alternating series test then says that the series must converge for all

.
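The alternating series test also comes with an error bound: when the terms decrease in absolute value to 0, the distance from the partial sum S_n to the full sum is at most the next term. A minimal sketch that checks this numerically, using a hypothetical stand-in term a_k = 1/k (the alternating harmonic series, chosen only because its sum ln 2 is known, not because it is the series in the question):

```python
import math
from typing import Callable, List


def alternating_partial_sums(a: Callable[[int], float], n_terms: int) -> List[float]:
    """Return [S_1, ..., S_n], where S_n = sum_{k=1}^{n} (-1)**(k + 1) * a(k)."""
    sums: List[float] = []
    total = 0.0
    for k in range(1, n_terms + 1):
        total += (-1) ** (k + 1) * a(k)
        sums.append(total)
    return sums


# Hypothetical stand-in term: positive, decreasing, tends to 0.
def harmonic(k: int) -> float:
    return 1.0 / k


sums = alternating_partial_sums(harmonic, 1000)
limit = math.log(2)  # known sum of the alternating harmonic series
# Alternating series estimate: |limit - S_n| <= a(n + 1) for every n.
print(all(abs(limit - s) <= harmonic(n + 1) for n, s in enumerate(sums, start=1)))
```

Here the even-indexed partial sums sit below the limit and the odd-indexed ones above it, which is the same squeeze the test relies on.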

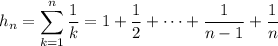

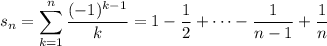

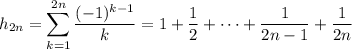

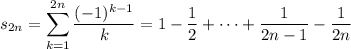

For the second question, recall that

(note that the above is true for even

only; it wouldn't be too difficult to adapt the argument if

is odd)

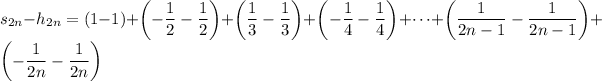

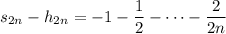

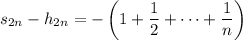

It follows that

Subtracting

from

, you have

as required. Notice that assuming

is odd doesn't change the result; the last term in

ends up canceling with the corresponding term in

regardless of the parity of

.