Answer:

Option 3

The time taken for a radio signal to travel from a satellite to the surface of the Earth is

Explanation:

Given: Radio signals travel at a rate of

meters per second. The satellite is orbiting at a height of

.

To find: How many seconds will it take for a radio signal to travel from a satellite to the surface of the Earth?

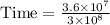

Solution:

The speed of radio signal is

meters per second.

The distance or height of satellite orbiting is

.

Substitute the values into the formula time = distance ÷ speed:

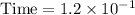
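The substitution step can also be checked numerically. The following is a minimal sketch, assuming the signal travels straight down at constant speed; the function name `travel_time_seconds` and the two numbers used are illustrative assumptions, not values taken from the problem statement.

```python
def travel_time_seconds(distance_m: float, speed_m_per_s: float) -> float:
    """Return the time in seconds needed to cover distance_m at a constant speed."""
    if speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    # time = distance / speed
    return distance_m / speed_m_per_s


# Illustrative values only (assumptions, not the values from the problem):
speed = 3.0e8   # m/s, approximate speed of radio waves
height = 3.6e7  # m, a hypothetical orbital height

print(travel_time_seconds(height, speed))
```

To check the worked answer, replace `speed` and `height` with the values given in the problem and compare the result with the listed options.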

Therefore, Option 3 is correct.
