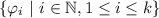

Let

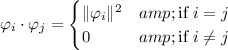

be a set of orthogonal vectors. By definition of orthogonality, any pairwise dot product between distinct vectors must be zero, i.e.

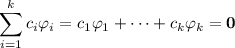

Write the vectors in the set as $v_1, \dots, v_n$. Suppose there is some linear combination of the $v_i$ that equals the zero vector. In other words, assume they are linearly dependent, so that there exist scalars $c_1, \dots, c_n$ (not all zero) such that the following holds (this is our hypothesis):

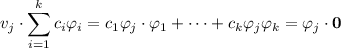

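Take the dot product of both sides with any vector $v_j$ from the set:

$$(c_1 v_1 + c_2 v_2 + \cdots + c_n v_n) \cdot v_j = \mathbf{0} \cdot v_j = 0.$$

By orthogonality of the vectors, every term with $i \neq j$ vanishes, so this reduces to

$$c_j \, (v_j \cdot v_j) = 0.$$

Since none of the $v_j$ are zero vectors (an assumption the claim needs, because a set containing the zero vector is never linearly independent), we have $v_j \cdot v_j = \|v_j\|^2 > 0$, and this means $c_j = 0$. This is true for all $j$, which means only $c_1 = c_2 = \cdots = c_n = 0$ will allow such a linear combination to equal the zero vector. That contradicts the hypothesis, and hence the set of vectors must be linearly independent.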
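The key step of the argument, dotting a linear combination with one vector of the set to isolate a single coefficient, can be checked numerically. The following is a minimal sketch in plain Python, not part of the proof itself. The example vectors and the helper names `dot`, `combine`, and `coefficients` are assumptions chosen for illustration.

```python
from fractions import Fraction


def dot(u, v):
    """Standard dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def combine(coeffs, vectors):
    """Return the linear combination sum_i coeffs[i] * vectors[i]."""
    dim = len(vectors[0])
    return tuple(
        sum(c * v[k] for c, v in zip(coeffs, vectors)) for k in range(dim)
    )


def coefficients(w, vectors):
    """Recover each c_j from w = sum_i c_i v_i by dotting with v_j.

    Assumes the vectors are pairwise orthogonal and nonzero, so that
    w . v_j = c_j (v_j . v_j) and v_j . v_j > 0.
    """
    return [Fraction(dot(w, v), dot(v, v)) for v in vectors]


# Hypothetical example set: pairwise orthogonal, nonzero vectors in R^3.
vectors = [(1, 1, 0), (1, -1, 0), (0, 0, 2)]

# Confirm pairwise orthogonality of the example set.
assert all(
    dot(vectors[i], vectors[j]) == 0
    for i in range(len(vectors))
    for j in range(i + 1, len(vectors))
)

# Dotting recovers the coefficients of an arbitrary combination ...
w = combine([3, -2, 5], vectors)
recovered = coefficients(w, vectors)

# ... and for the zero vector it forces every coefficient to be zero.
zero_coeffs = coefficients((0, 0, 0), vectors)
```

Here `zero_coeffs` holds only zeros, which is the numerical counterpart of the conclusion above: for an orthogonal set of nonzero vectors, the only combination that gives the zero vector is the trivial one.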