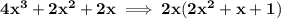

so in short, the only factoring you can do without bringing in complex factors is to take out the common factor of 2x.

now, the trinomial 2x²+x+1 has no real roots, only complex (or "imaginary") ones.

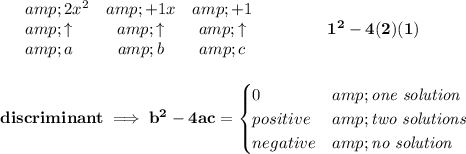

if you have already covered the quadratic formula, you can test it with that, or you can simply check the trinomial's discriminant, b²-4ac, and notice that it comes out negative.
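
for reference, here is that discriminant check worked out, reading a = 2, b = 1, c = 1 off the trinomial 2x²+x+1 (the labels a, b, c are just the usual ones for ax²+bx+c):

$$
b^2 - 4ac = (1)^2 - 4(2)(1) = 1 - 8 = -7 < 0
$$

since the discriminant is negative, the quadratic formula can only give complex roots:

$$
x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} = \frac{-1 \pm \sqrt{-7}}{4} = \frac{-1 \pm i\sqrt{7}}{4}
$$

so there is no way to split 2x²+x+1 into factors with real coefficients.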