Answer:

The truck drove in the city for 1.4 hours.

Explanation:

Let

be the amount of time the truck drove on the highway, and

be the amount of time the truck drove on the highway, and

be the amount of time it drove in the city.

be the amount of time it drove in the city.

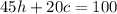

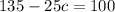

Since the total distance the truck traveled was 100 miles, we have

this says the distances the truck traveled on the highway plus the distance it traveled in the city is 100 miles.

this says the distances the truck traveled on the highway plus the distance it traveled in the city is 100 miles.

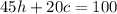

And since the truck traveled for a total of 3 hours, we have:

Thus we have two equations and two unknowns:

(1).

(2).

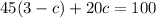
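As a cross-check, a 2×2 linear system like (1) and (2) can also be solved with Cramer's rule instead of substitution. The sketch below is a minimal illustration, not part of the original solution: `solve_by_cramer` and its parameter names are hypothetical, and the two speeds have to be supplied from the problem statement.

```python
def solve_by_cramer(highway_speed, city_speed, total_distance=100.0, total_time=3.0):
    """Solve r_h*h + r_c*c = D and h + c = T for (h, c) using Cramer's rule.

    Hypothetical helper: the speeds are assumed to come from the problem statement.
    """
    # Determinant of the coefficient matrix [[r_h, r_c], [1, 1]].
    det = highway_speed * 1 - city_speed * 1
    if det == 0:
        raise ValueError("speeds must differ for a unique solution")
    # Replace the first column with the right-hand side [D, T] to get h.
    h = (total_distance * 1 - city_speed * total_time) / det
    # Replace the second column with the right-hand side [D, T] to get c.
    c = (highway_speed * total_time - 1 * total_distance) / det
    return h, c
```

The formula for `c` here matches the one the substitution method produces, which makes it a convenient independent check.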

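We solve this system by first solving for $h$ in equation (2), which gives $h = 3 - c$, and substituting it into equation (1):

$$r_h(3 - c) + r_c c = 100 \quad\Longrightarrow\quad c = \frac{3r_h - 100}{r_h - r_c}.$$

With the speeds given in the problem, this evaluates to $c = 1.4$, so the time in the city was 1.4 hours.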

And the time on the highway was, from equation (2), $h = 3 - c = 3 - 1.4 = 1.6$ hours.
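The substitution steps above can be sketched in Python. This is a minimal sketch under stated assumptions: `time_split` is a hypothetical name, and the two average speeds from the problem statement are assumed to be passed in as `highway_speed` and `city_speed`.

```python
def time_split(highway_speed, city_speed, total_distance=100.0, total_time=3.0):
    """Return (highway_hours, city_hours) by substitution.

    From (2), h = T - c; substituting into (1) gives
    r_h*(T - c) + r_c*c = D, so c = (r_h*T - D) / (r_h - r_c).
    Speeds are assumed inputs taken from the problem statement.
    """
    if highway_speed == city_speed:
        raise ValueError("speeds must differ for a unique solution")
    # Solve the substituted equation for the city time c.
    city_hours = (highway_speed * total_time - total_distance) / (highway_speed - city_speed)
    # Back-substitute into equation (2) for the highway time h.
    highway_hours = total_time - city_hours
    # A negative time means the distance cannot be covered in the given time at these speeds.
    if city_hours < 0 or highway_hours < 0:
        raise ValueError("no physically meaningful split for these speeds")
    return highway_hours, city_hours
```

With the problem's two speeds, this should return 1.6 hours on the highway and 1.4 hours in the city. The negative-time check guards against speed pairs for which 100 miles in 3 hours is impossible.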