We must make several assumptions in order to answer this question.

First of all, we must assume that both functions grow to infinity. In fact, if

and

with

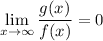

, you simply have

Secondly, we must assume that

grows asymptotically slower than

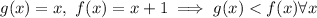

. Otherwise, you might choose

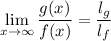

but you would have

If instead

is asymptotically slower than

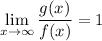

, by definition of being asymptotically slower you have
