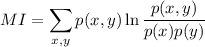

The mutual information is

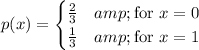
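As a numerical cross-check, here is a minimal Python sketch of the standard discrete definition, $I(X;Y)=\sum_{x,y} p(x,y)\log\frac{p(x,y)}{p_X(x)\,p_Y(y)}$. It assumes the image above shows this usual form. The function name `mutual_information` and the choice to store the joint PMF as a dict mapping `(x, y)` pairs to probabilities are illustrative assumptions, not anything from the original answer.

```python
import math
from collections import defaultdict


def mutual_information(joint, base=math.e):
    """Mutual information I(X;Y) of a discrete joint PMF (hypothetical helper).

    `joint` maps (x, y) pairs to probabilities; pairs that are not listed
    have probability zero. Only pairs with p(x, y) > 0 contribute, which
    matches the convention 0 * log 0 = 0. `base` picks the logarithm:
    math.e gives nats, 2 gives bits.
    """
    # Marginal PMFs: p_X(x) = sum over y of p(x, y), p_Y(y) = sum over x of p(x, y)
    p_x = defaultdict(float)
    p_y = defaultdict(float)
    for (x, y), p in joint.items():
        p_x[x] += p
        p_y[y] += p

    # Sum p(x, y) * log(p(x, y) / (p_X(x) p_Y(y))) over the support only
    total = 0.0
    for (x, y), p in joint.items():
        if p > 0:
            total += p * math.log(p / (p_x[x] * p_y[y]), base)
    return total


# Sanity check: an independent joint PMF has zero mutual information.
independent = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
print(mutual_information(independent))
```

Passing `base=2` reports the same quantity in bits instead of nats.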

First compute the marginal distribution of the first variable. The second variable has the same marginal distribution (swap the roles of the two variables in that computation).
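The marginal step can be sketched in Python by summing the joint PMF over the other variable. This is a minimal sketch: the dict representation and the name `marginals` are assumptions. The example PMF is **hypothetical**. It uses the three support points from this answer and treats them as equally likely purely for illustration, since the actual probabilities are in the problem statement and are not reproduced in the text.

```python
from collections import defaultdict


def marginals(joint):
    """Return the marginal PMFs (p_X, p_Y) of a joint PMF (hypothetical helper).

    `joint` maps (x, y) pairs to probabilities; unlisted pairs have
    probability zero.
    """
    p_x = defaultdict(float)
    p_y = defaultdict(float)
    for (x, y), p in joint.items():
        p_x[x] += p  # accumulate over y for this fixed x
        p_y[y] += p  # accumulate over x for this fixed y
    return dict(p_x), dict(p_y)


# Hypothetical example: support {(0,0), (1,0), (0,1)}, assumed uniform for illustration.
joint = {(0, 0): 1/3, (1, 0): 1/3, (0, 1): 1/3}
p_x, p_y = marginals(joint)
print(p_x)
print(p_y)
```

In this hypothetical the two marginals coincide because p(1,0) = p(0,1), which is the kind of symmetry that lets one marginal be read off from the other.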

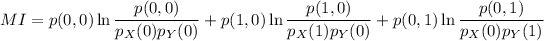

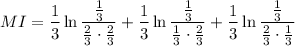

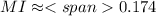

The support of the joint PMF consists of the points (0,0), (1,0), and (0,1), so these are the only terms in the sum. We get
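Written out term by term, the sum has exactly one term per support point. This assumes the standard definition, with the notation $p(x,y)$ for the joint PMF and $p_X$, $p_Y$ for the marginals; that notation is not shown in the text:

$$
I(X;Y) = p(0,0)\log\frac{p(0,0)}{p_X(0)\,p_Y(0)} + p(1,0)\log\frac{p(1,0)}{p_X(1)\,p_Y(0)} + p(0,1)\log\frac{p(0,1)}{p_X(0)\,p_Y(1)}
$$

The point (1,1) lies outside the support, so it contributes nothing, by the convention $0\log 0 = 0$.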

Check how mutual information is defined in your text or notes. I used the natural logarithm above, so the result is in nats; a base-2 logarithm would give it in bits.
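Switching the logarithm base only rescales the answer, because $\log_2 z = \ln z / \ln 2$ applies to every term of the sum. A minimal Python sketch of the conversion follows. The helper names `nats_to_bits` and `bits_to_nats` are hypothetical.

```python
import math


def nats_to_bits(mi_nats):
    """Convert a mutual-information value from nats (natural log) to bits (log base 2)."""
    # log2(z) = ln(z) / ln(2), so the whole sum scales by 1 / ln(2)
    return mi_nats / math.log(2)


def bits_to_nats(mi_bits):
    """Convert a mutual-information value from bits back to nats."""
    return mi_bits * math.log(2)


# ln(2) nats is exactly one bit
print(nats_to_bits(math.log(2)))
```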