Answer: 0.12 seconds

Explanation:

Given:

The speed of the radio wave is $3 \times 10^{8}$ meters per second.


The distance of the satellite from the Earth is

meters


The formula for speed is $\text{speed} = \dfrac{\text{distance}}{\text{time}}$.

Rearranging for time gives:

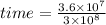

Substitute the distance and speed values into the above equation:

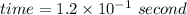

Therefore, the radio wave takes 0.12 seconds to travel between the Earth and the satellite.
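The calculation can be checked numerically with a short sketch. The function name `travel_time` is hypothetical, and both input values are assumptions: $3 \times 10^{8}$ m/s is the usual rounded speed of radio waves, and $3.6 \times 10^{7}$ m is an illustrative distance chosen to be consistent with the 0.12-second result, not a figure quoted from the problem.

```python
def travel_time(distance_m: float, speed_m_per_s: float) -> float:
    """Return the time in seconds needed to cover distance_m at speed_m_per_s."""
    if speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    # time = distance / speed
    return distance_m / speed_m_per_s


# Assumed values for illustration only.
RADIO_SPEED = 3.0e8         # m/s, rounded speed of radio waves
SATELLITE_DISTANCE = 3.6e7  # m, hypothetical distance to the satellite

time_s = travel_time(SATELLITE_DISTANCE, RADIO_SPEED)
print(f"{time_s:.2f} seconds")  # prints "0.12 seconds"
```

Changing `SATELLITE_DISTANCE` to the value given in the problem reproduces the substitution step for any other distance.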