Answer:

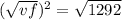

Data: vi = 30 m/s, h = 20 m, vf = ?, t = ?

First we find vf, then the time. Applying the third equation of motion, 2gs = vf² - vi² with s = h = 20 m, we get vf² = 2gs + vi² = 2 × 9.8 × 20 + (30)² = 392 + 900 = 1292. Taking the square root of both sides:

vf ≈ 35.94 m/s. Now we find the time by applying the first equation of motion, vf = vi + gt, so t = (vf - vi)/g = (35.94 - 30)/9.8 = 5.94/9.8, which gives t ≈ 0.606 s.
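A short Python sketch can double-check the arithmetic. Like the working above, it assumes the body moves downward the whole time, so g = 9.8 m/s² is taken as positive. The function names are illustrative only. It also substitutes t back into the second equation of motion, h = vi·t + ½gt², which should return the original 20 m.

```python
import math

G = 9.8  # m/s^2, the value of g used in the answer (taken positive, downward motion assumed)

def final_velocity(vi: float, h: float, g: float = G) -> float:
    """Third equation of motion, 2*g*h = vf**2 - vi**2, solved for vf."""
    return math.sqrt(vi**2 + 2 * g * h)

def time_to_fall(vi: float, vf: float, g: float = G) -> float:
    """First equation of motion, vf = vi + g*t, solved for t (note the parentheses)."""
    return (vf - vi) / g

vi, h = 30.0, 20.0          # given data: m/s and m
vf = final_velocity(vi, h)  # sqrt(1292), about 35.94 m/s
t = time_to_fall(vi, vf)    # uses the unrounded vf

# Cross-check with the second equation of motion: h = vi*t + (1/2)*g*t**2
h_check = vi * t + 0.5 * G * t**2
print(f"vf = {vf:.2f} m/s, t = {t:.4f} s, h_check = {h_check:.2f} m")
```

Carrying the unrounded vf through gives t ≈ 0.6066 s, while rounding vf to 35.94 first gives about 0.6061 s, so either way t ≈ 0.61 s.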