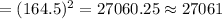

Answer: 27061

Explanation:

Given: The scale readings are Normally distributed with unknown mean µ and standard deviation σ = 0.01 g.

Confidence level = 90%

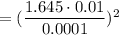

The critical value for a 90% confidence level is z* = 1.645 (from the z-table).

Margin of error: E = 0.0001

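Now, the required minimum sample size, with σ = 0.01, is n = (z* × σ / E)² = (1.645 × 0.01 / 0.0001)² = (164.5)² = 27060.25.

Since n must be a whole number, round up. Hence, n = 27061.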
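As a quick check of the arithmetic, here is a minimal Python sketch of the sample-size formula n = (z* × σ / E)², rounded up. The function name `min_sample_size` is hypothetical, and σ = 0.01 is an assumption: it is the value consistent with the stated answer of 27061, given z* = 1.645 and E = 0.0001.

```python
import math


def min_sample_size(z_star: float, sigma: float, margin: float) -> int:
    """Smallest n such that z_star * sigma / sqrt(n) <= margin."""
    if sigma <= 0 or margin <= 0:
        raise ValueError("sigma and margin must be positive")
    # n = (z* * sigma / E)^2, rounded up to the next whole observation
    return math.ceil((z_star * sigma / margin) ** 2)


# Values from the explanation; sigma = 0.01 is assumed (see lead-in).
n = min_sample_size(z_star=1.645, sigma=0.01, margin=0.0001)
print(n)
```

Note that the result depends on how z* is rounded: the table value 1.645 is used here to match the explanation, and a more precise quantile (about 1.6449) would give a slightly smaller n.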