Answer:

Explanation:

We are given the following in the question:

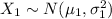

is a random normal variable with mean and variance

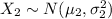

is a random normal variable with mean and variance

are independent random variables.

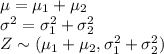

Let Z be the sum of the two variables.

Because the two variables are independent and normally distributed, Z is also normally distributed, with mean equal to the sum of the two means and variance equal to the sum of the two variances.

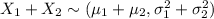
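The rule for the sum of two independent normal variables can be checked numerically. The sketch below is only an illustration: the parameter values (`mu1`, `sigma1`, `mu2`, `sigma2`) and the helper `simulate_sum` are hypothetical and are not taken from the question. It draws many samples of the sum and compares the sample mean and variance with the values the rule predicts.

```python
import random
import statistics


def simulate_sum(mu1, sigma1, mu2, sigma2, n=100_000, seed=0):
    """Draw n samples of the sum of two independent normal variables."""
    rng = random.Random(seed)
    # Each term is drawn separately, so the two variables are independent.
    return [rng.gauss(mu1, sigma1) + rng.gauss(mu2, sigma2) for _ in range(n)]


# Hypothetical parameters, chosen only for illustration (not from the question).
mu1, sigma1 = 10.0, 3.0   # first variable: mean 10, variance 9
mu2, sigma2 = 5.0, 4.0    # second variable: mean 5, variance 16

samples = simulate_sum(mu1, sigma1, mu2, sigma2)
sample_mean = statistics.fmean(samples)
sample_var = statistics.pvariance(samples, mu=sample_mean)

# Values predicted by the rule: means add, and variances add.
theoretical_mean = mu1 + mu2               # 15.0
theoretical_var = sigma1**2 + sigma2**2    # 25.0

print(f"mean:     sample {sample_mean:.3f}, theory {theoretical_mean:.3f}")
print(f"variance: sample {sample_var:.3f}, theory {theoretical_var:.3f}")
```

Note that it is the variances that add, not the standard deviations: with these illustrative values the standard deviation of the sum is 5, not 3 + 4 = 7.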

Thus, we can write: