To solve this problem, we apply Kepler's third law together with the relations for the nearest and farthest distances in an eccentric orbit.

Kepler's third law tells us that

$$T^2 = \frac{4\pi^2}{GM}\,a^3$$

Where

T = Period

G = Gravitational constant

M = Mass of the Sun

a = Semimajor axis of the comet's orbit
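As a numerical check on Kepler's third law with the symbols defined above, here is a minimal Python sketch. The function names and the constants `GM_SUN`, `AU_IN_M`, and `YEAR_IN_S` are assumptions introduced for illustration; they are not values taken from the original problem.

```python
import math

# Assumed constants (not given in the original text).
GM_SUN = 1.32712440018e20      # G*M for the Sun, in m^3/s^2
AU_IN_M = 1.495978707e11       # one astronomical unit, in metres
YEAR_IN_S = 365.25 * 86400.0   # one Julian year, in seconds


def period_from_axis(a_m, gm=GM_SUN):
    """Period T in seconds, from T^2 = 4*pi^2 * a^3 / (G*M)."""
    return 2.0 * math.pi * math.sqrt(a_m ** 3 / gm)


def axis_from_period(t_s, gm=GM_SUN):
    """Semimajor axis a in metres, from the same law solved for a."""
    return (gm * t_s ** 2 / (4.0 * math.pi ** 2)) ** (1.0 / 3.0)


if __name__ == "__main__":
    # Sanity check: a one-year orbit around the Sun should have a
    # semimajor axis close to 1 AU.
    a = axis_from_period(YEAR_IN_S)
    print(f"a = {a:.4e} m = {a / AU_IN_M:.4f} AU")
```

Working in SI units keeps G and M explicit. When the period is expressed in years and the semimajor axis in astronomical units, the same law for an orbit around the Sun reduces to T² = a³, which is why a one-year period maps to roughly 1 AU.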

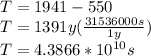

The period in years would be given by

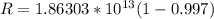

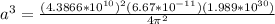

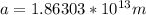

PART A) Substituting the known values and solving for a, we have

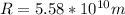

Therefore the semimajor axis is

PART B) If the semimajor axis a and the eccentricity e of an orbit are known, then the periapsis distance r_p and the apoapsis distance r_a can be calculated by

$$r_p = a(1 - e), \qquad r_a = a(1 + e)$$
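A minimal Python sketch of the periapsis and apoapsis calculation, assuming the standard relations for an ellipse (written out in the code comments). The function name `apsides` and the sample values of a and e are hypothetical and are not the values from this problem.

```python
def apsides(a, e):
    """Return (periapsis, apoapsis) distances for a closed orbit.

    Uses the standard ellipse relations
        r_p = a * (1 - e)   (closest approach)
        r_a = a * (1 + e)   (farthest point)
    The results are in the same unit as `a`.
    """
    if not 0.0 <= e < 1.0:
        raise ValueError("eccentricity must satisfy 0 <= e < 1 for an ellipse")
    return a * (1.0 - e), a * (1.0 + e)


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    a_au = 10.0   # semimajor axis in AU
    ecc = 0.5     # eccentricity
    r_p, r_a = apsides(a_au, ecc)
    print(f"periapsis = {r_p} AU, apoapsis = {r_a} AU")
```

The two distances always sum to 2a, the length of the major axis, which is a quick way to check a result.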