Answer:

8 seconds (answer choice C).

Step-by-step explanation:

The speed we are given for the cat (10 m/s) applies from the moment the dog starts chasing it.

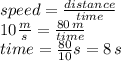

Notice that the actual distance the cat ran is 100 meters minus 20 meters (100 - 20 = 80 meters). We therefore know the distance covered by the cat (80 meters) and its speed (10 m/s), so we can use the definition of speed, rearranged as time = distance / speed, to find the time it took the cat to get to the tree.
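As a worked version of that step, here is the calculation written out. The symbols $d$ (distance), $v$ (speed), and $t$ (time) are my own labels, not from the problem statement, and the speed is assumed to stay constant at 10 m/s over the whole 80 meters:

$$
v = \frac{d}{t} \quad\Rightarrow\quad t = \frac{d}{v} = \frac{80\ \text{m}}{10\ \text{m/s}} = 8\ \text{s}
$$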

Since all the physical quantities involved were given in SI units, the answer also comes out in the SI unit of time: seconds.