Answer:

Step-by-step explanation:

The force of gravity between the Earth, of mass $M \approx 5.97\times10^{24}\ \text{kg}$, and a rock of mass $m$ orbiting at a distance equal to the radius of the Earth, $R = 6371000\ \text{m}$ (the extra 1.5 m is insignificant), would be:

$$F = \frac{G M m}{R^2}$$

Where $G \approx 6.674\times10^{-11}\ \text{N}\,\text{m}^2/\text{kg}^2$ is the gravitational constant.

Under this force, the rock experiences a (centripetal) acceleration given by:

$$a = \frac{v^2}{R}$$

where $v$ is the rock's orbital speed.

Putting it all together:

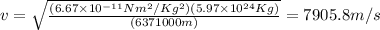

Which for our values is:

$$v = \sqrt{\frac{G M}{R}} \approx 7.9\times10^{3}\ \text{m/s}$$

And since the circumference of the orbit would be $2\pi R$, the time taken to travel it would be:

$$T = \frac{2\pi R}{v} \approx 5.1\times10^{3}\ \text{s} \approx 84\ \text{min}$$
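As a quick numerical check of the steps above, here is a minimal Python sketch. The values of `G` and `M_EARTH` are standard textbook figures assumed here (they are not given in the surviving text), and the function names `orbital_speed` and `orbital_period` are hypothetical, chosen only for illustration.

```python
import math

G = 6.674e-11        # gravitational constant in N m^2 / kg^2 (assumed textbook value)
M_EARTH = 5.972e24   # mass of the Earth in kg (assumed textbook value)
R_EARTH = 6_371_000  # radius of the Earth in m (value used in the explanation)


def orbital_speed(radius_m: float) -> float:
    """Speed of a circular orbit, from G*M*m/R^2 = m*v^2/R."""
    return math.sqrt(G * M_EARTH / radius_m)


def orbital_period(radius_m: float) -> float:
    """Time to travel one circumference 2*pi*R at the orbital speed."""
    return 2 * math.pi * radius_m / orbital_speed(radius_m)


v = orbital_speed(R_EARTH)
T = orbital_period(R_EARTH)
print(f"speed  = {v:.0f} m/s")
print(f"period = {T:.0f} s ({T / 60:.1f} min)")
```

With these inputs the speed comes out at roughly 7.9 km/s and the period at roughly 84 minutes. The rock's mass never enters the calculation because it cancels when the gravitational force is set equal to the centripetal force.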