Answer:

Step-by-step explanation:

Total distance = 40 miles.

The first y miles are driven at x miles/hr.

The remaining 40 - y miles are driven at 1.25x miles/hr.

We know that

Distance = speed × time

For y miles

Let the time taken to cover y miles be t.

y = x t

t = y/x    (1)

For the remaining 40 - y miles

Let the time taken to cover 40 - y miles be t'.

40 - y = 1.25x t'

t' = (40 - y)/(1.25x)    (2)

Adding equations (1) and (2):

t + t' = y/x + (40 - y)/(1.25x)

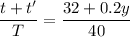

t+t'=(32+0.2y)/x
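
The simplification above can be spot-checked numerically. The following is a minimal sketch, not part of the original solution: the function names and the sample values of y and x are assumptions chosen only for illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def total_time(y, x):
    """Time for y miles at x mph plus the remaining 40 - y miles at 1.25x mph."""
    first_leg = Fraction(y) / x
    second_leg = (40 - Fraction(y)) / (Fraction(5, 4) * x)
    return first_leg + second_leg


def simplified_time(y, x):
    """The simplified form (32 + 0.2y)/x, with 0.2 written as the exact fraction 1/5."""
    return (32 + Fraction(1, 5) * y) / x


# Sample (y, x) pairs are illustrative assumptions, not given in the problem.
for y, x in [(0, 10), (10, 20), (25, 50), (40, 8)]:
    assert total_time(y, x) == simplified_time(y, x)
```

If the loop finishes without an assertion error, the two forms agree for every sample pair.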

Now let T be the time Marla would take if she traveled at x miles per hour for the entire trip.

40 = x T

T=40/x

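So the ratio of the two times is

(t + t')/T = [(32 + 0.2y)/x] / (40/x) = (32 + 0.2y)/40

The speed x cancels, but y remains, so to find the numerical value of the above expression we have to know the value of y.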
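
To see how the result depends on y, the comparison of t + t' with T can be evaluated for a few values. This is a hedged sketch: the function name and the sample values of y are assumptions, and it only uses the expressions t + t' = (32 + 0.2y)/x and T = 40/x derived above, whose quotient no longer contains x.

```python
from fractions import Fraction


def percent_of_constant_speed_time(y):
    """Return (t + t')/T as a percentage for 0 <= y <= 40 (x cancels out)."""
    if not 0 <= y <= 40:
        raise ValueError("y must be between 0 and 40 miles")
    return (32 + Fraction(y) / 5) / 40 * 100


# Sample values of y are illustrative assumptions only.
for y in (0, 20, 40):
    print(y, percent_of_constant_speed_time(y))
```

The percentage rises linearly with y, which is why a single numerical answer needs the value of y.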