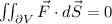

Answer:

The surface integral is zero, because the divergence of the field is zero everywhere inside the surface.

Explanation:

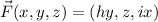

The vector field we have to integrate is:

Where (I assume) h and i are just constants.

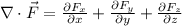

To use the divergence theorem, we first have to know what the divergence is.

The divergence of a vector field $\mathbf{F}$ is denoted:

$$\nabla \cdot \mathbf{F}$$

And it is equal to:

$$\nabla \cdot \mathbf{F} = \frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} + \frac{\partial F_z}{\partial z}$$

Where $F_x$, $F_y$ and $F_z$ are the components of the vector field in the x, y and z direction respectively.
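To see the definition of the divergence in action, here is a short worked example. The field used is a hypothetical one chosen for illustration, not the field from this problem:

$$\mathbf{G} = (x,\; y,\; -2z)$$

$$\nabla \cdot \mathbf{G} = \frac{\partial}{\partial x}(x) + \frac{\partial}{\partial y}(y) + \frac{\partial}{\partial z}(-2z) = 1 + 1 - 2 = 0$$

Each component is differentiated only with respect to its own coordinate, and the three results are added.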

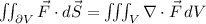

Now, what does the Divergence Theorem (or Gauss-Ostrogradsky Theorem) say?

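It states that:

$$\iint_S \mathbf{F} \cdot \mathbf{n} \, dS = \iiint_V (\nabla \cdot \mathbf{F}) \, dV$$

if $\mathbf{F}$ is a continuously differentiable vector field, where $S$ is a closed surface, $V$ is the region it encloses and $\mathbf{n}$ is the outward unit normal.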

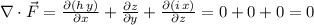

But, if we calculate the divergence of this vector field, we get zero! Let's check that.

Now, if we insert this into the statement of the Divergence Theorem, we get that the surface integral over the closed surface $S$ is equal to the triple integral of zero over its interior $V$. That integral is obviously zero, thus we have:

$$\iint_S \mathbf{F} \cdot \mathbf{n} \, dS = \iiint_V 0 \, dV = 0$$
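As a sanity check on this argument, the sketch below estimates both sides of the Divergence Theorem numerically. Everything in it is an assumption made for illustration: the field `example_field` is a hypothetical divergence-free field (not the one from this problem), the closed surface is taken to be the unit cube, and the helper names `divergence` and `flux_through_unit_cube` are made up here.

```python
def divergence(F, x, y, z, eps=1e-5):
    """Central-difference estimate of div F at the point (x, y, z)."""
    dFx = (F(x + eps, y, z)[0] - F(x - eps, y, z)[0]) / (2 * eps)
    dFy = (F(x, y + eps, z)[1] - F(x, y - eps, z)[1]) / (2 * eps)
    dFz = (F(x, y, z + eps)[2] - F(x, y, z - eps)[2]) / (2 * eps)
    return dFx + dFy + dFz


def flux_through_unit_cube(F, n=50):
    """Midpoint-rule estimate of the outward flux of F through the
    six faces of the cube [0, 1]^3."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            u = (i + 0.5) * h
            v = (j + 0.5) * h
            # Each pair of opposite faces: outward normal is +axis on the
            # far face and -axis on the near face, hence the subtraction.
            total += (F(1.0, u, v)[0] - F(0.0, u, v)[0]) * h * h
            total += (F(u, 1.0, v)[1] - F(u, 0.0, v)[1]) * h * h
            total += (F(u, v, 1.0)[2] - F(u, v, 0.0)[2]) * h * h
    return total


def example_field(x, y, z):
    # Hypothetical field (x, y, -2z); its divergence is 1 + 1 - 2 = 0.
    return (x, y, -2.0 * z)


def contrast_field(x, y, z):
    # Hypothetical field (x, y, z); its divergence is 3, so the flux
    # through the unit cube (volume 1) should be close to 3.
    return (x, y, z)


if __name__ == "__main__":
    print("div of example field:", divergence(example_field, 0.3, 0.7, 0.2))
    print("flux of example field:", flux_through_unit_cube(example_field))
    print("flux of contrast field:", flux_through_unit_cube(contrast_field))
```

For the divergence-free field both the estimated divergence and the estimated flux should come out close to zero, while the contrast field gives a flux close to its divergence times the volume of the cube.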