Answer:

The required sample size is n=8,660.

Explanation:

We have to calculate the minimum sample size that will give us a maximum margin of error of 500 miles, with a 90% confidence.

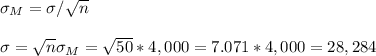

A pilot sample of n=50 give a sample standard deviation of 4,000 miles.

With the pilot sample we can calculate the population standard deviation as:

The equation for the margin of error is:

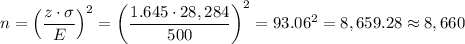

The z-value for a 90% confidence interval is z=1.645.

Then, we can estimate the sample size as:
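The calculation above can be checked with a short script. This is a minimal sketch, assuming the normal approximation and the pilot standard deviation as the planning value for σ; the function name `required_sample_size` is hypothetical. It computes the two-sided critical value with Python's standard `statistics.NormalDist` instead of reading it from a table.

```python
import math
from statistics import NormalDist


def required_sample_size(sigma: float, margin: float, confidence: float) -> int:
    """Smallest n such that z * sigma / sqrt(n) <= margin (normal approximation).

    sigma is a planning value for the population standard deviation,
    here taken from the pilot-sample standard deviation.
    """
    # Two-sided critical value: for 90% confidence this is the 0.95 quantile (about 1.645).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = (z * sigma / margin) ** 2
    # Round up so the achieved margin of error does not exceed the target.
    return math.ceil(n)


print(required_sample_size(sigma=4000, margin=500, confidence=0.90))  # 174
```

Using the exact quantile (z ≈ 1.64485) instead of the table value 1.645 gives n ≈ 173.16, which still rounds up to 174.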