Answer:

Step-by-step explanation:

Given data:

wavelength of emission =

output power = 100 mW

We can read the value of the absorption coefficient from the graph of absorption coefficient vs. wavelength.

for wavelength

the absorption coefficient value is 10^{3}

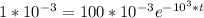

The intensity can be expressed as a function of thickness as follows:

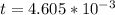

Substituting all the values into this expression gives the thickness.
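As a rough numerical sketch of that substitution: the snippet below assumes the intensity relation is the usual exponential attenuation law, I(x) = I0 * exp(-alpha * x), which solves to x = ln(I0 / I) / alpha. The function name `thickness_for_transmission`, the unit of the absorption coefficient (taken here as cm^-1), and the target transmitted fraction are all assumptions made for illustration; none of them is stated in the answer above.

```python
import math


def thickness_for_transmission(alpha, transmitted_fraction):
    """Thickness x at which I(x) / I0 equals transmitted_fraction.

    Assumes exponential attenuation, I(x) = I0 * exp(-alpha * x),
    so x = ln(1 / transmitted_fraction) / alpha.
    The result is in the inverse of alpha's unit (cm if alpha is in cm^-1).
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    if not 0 < transmitted_fraction <= 1:
        raise ValueError("transmitted_fraction must be in (0, 1]")
    return math.log(1.0 / transmitted_fraction) / alpha


# Absorption coefficient read from the graph; the unit cm^-1 is an assumption.
alpha = 1e3

# Hypothetical target: 10% of the incident intensity is transmitted.
target_fraction = 0.10

x = thickness_for_transmission(alpha, target_fraction)
print(f"required thickness: {x:.3e} (in cm, if alpha is in cm^-1)")
```

Replace `target_fraction` with the ratio of transmitted to incident intensity that the problem actually specifies. The output power only matters through that ratio, so it cancels out of the thickness.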