Answer:

Explanation:

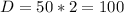

At a speed of 50 m/h, the time taken is 2 hours.

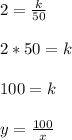

At a speed of 45 m/h, the time taken is 2.5 hours.

You can find the distance between A and B from

Distance = speed × time

But time y varies inversely with speed x, hence

y = k / x, with k = D

where k is the constant of proportionality and D is the distance.

To find k, rearrange the inverse-variation relation:

k = x × y

where y is time and x is speed.

Given x = 45 m/h, find the time y by applying the expression above.
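As a quick check of the arithmetic, here is a minimal Python sketch of the inverse-variation calculation. It assumes the constant k is fixed by the first reading only (50 m/h for 2 hours). The function name `time_for_speed` is made up for illustration and is not part of the original answer.

```python
def time_for_speed(known_speed, known_time, new_speed):
    """Return the travel time at new_speed, assuming time varies inversely with speed."""
    # Constant of proportionality; for this problem it equals the distance.
    k = known_speed * known_time
    return k / new_speed


# Assumption: k comes from the first reading, 50 m/h for 2 hours.
k = 50 * 2
y = time_for_speed(50, 2, 45)

print(k)            # 100
print(round(y, 2))  # 2.22
```

Under that assumption, k = 100, so the time at 45 m/h comes out to about 2.22 hours.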