Answer:

0.733 m

Step-by-step explanation:

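The maximum safe intensity for human exposure is

$$I \approx 1.48\ \text{W/m}^2$$

(this is the value consistent with the power and distance used in this answer).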

Intensity is defined as the ratio of the power P to the irradiated surface area A:

$$I = \frac{P}{A}$$

For a source emitting uniformly in all directions, the area is the surface of a sphere of radius r:

$$A = 4\pi r^2$$

So

$$I = \frac{P}{4\pi r^2}$$

In this case, we have a radar unit with a power of

P = 10.0 W

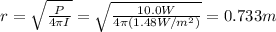

So we can solve the previous equation for r, the distance beyond which a person can be considered safe:

$$r = \sqrt{\frac{P}{4\pi I}} \approx 0.733\ \text{m}$$
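As a quick numerical check, the relation r = sqrt(P / (4πI)) can be sketched in a few lines of Python. The function name `safe_distance` is made up for illustration, and the intensity limit of 1.48 W/m² is an assumption back-computed from the stated answer of 0.733 m and the power of 10.0 W.

```python
import math


def safe_distance(power_w: float, max_intensity_w_m2: float) -> float:
    """Distance (m) at which an isotropic source of the given power (W)
    produces the given intensity (W/m^2): r = sqrt(P / (4*pi*I))."""
    if power_w <= 0 or max_intensity_w_m2 <= 0:
        raise ValueError("power and intensity must be positive")
    return math.sqrt(power_w / (4 * math.pi * max_intensity_w_m2))


P = 10.0      # power of the radar unit, in W (from the answer above)
I_MAX = 1.48  # ASSUMED safe intensity in W/m^2, inferred from r = 0.733 m

r = safe_distance(P, I_MAX)
print(f"r = {r:.3f} m")  # prints "r = 0.733 m"
```

Because intensity falls off as 1/r², doubling the distance cuts the exposure to a quarter, so any distance larger than r is below the limit.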