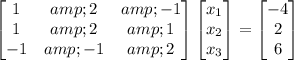

In matrix form, the system is

Solving this "using matrices" is a bit ambiguous, but it brings to mind two standard methods: inverting the coefficient matrix, and Gauss-Jordan elimination.

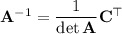

Compute the inverse of the coefficient matrix using the formula

$$A^{-1} = \frac{1}{\det A}\, C^{\mathsf{T}}$$

where $A$ is the coefficient matrix, $\det A$ is its determinant, $C$ is the cofactor matrix, and ${}^{\mathsf{T}}$ denotes the matrix transpose.
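The formula can be sketched in Python with exact rational arithmetic from the standard `fractions` module. The helper names (`minor`, `det`, `cofactor_matrix`, `inverse`, `mat_vec`) and the 3×3 example system are hypothetical, chosen only for illustration; they are not the system solved here. The determinant uses a Laplace expansion along the first column, matching the hand computation.

```python
from fractions import Fraction

def minor(m, i, j):
    """Copy of m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]

def det(m):
    """Determinant by Laplace expansion along the first column."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** i * m[i][0] * det(minor(m, i, 0)) for i in range(len(m)))

def cofactor_matrix(m):
    """C[i][j] = (-1)^(i+j) * det(minor(m, i, j))."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor(m, i, j)) for j in range(n)]
            for i in range(n)]

def transpose(m):
    return [list(col) for col in zip(*m)]

def inverse(m):
    """A^{-1} = C^T / det(A), with exact fractions."""
    d = det(m)
    if d == 0:
        raise ValueError("coefficient matrix is singular")
    return [[Fraction(c, d) for c in row] for row in transpose(cofactor_matrix(m))]

def mat_vec(m, v):
    """Matrix-vector product m @ v."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

# Hypothetical example system (not the one above):
#   2x +  y -  z =   8
#  -3x -  y + 2z = -11
#  -2x +  y + 2z =  -3
A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
b = [8, -11, -3]
x = mat_vec(inverse(A), b)
print(x)  # [Fraction(2, 1), Fraction(3, 1), Fraction(-1, 1)]
```

Exact fractions avoid the rounding noise that floating-point division by $\det A$ would introduce. The last line applies the inverse to the constant vector to obtain the solution.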

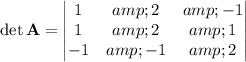

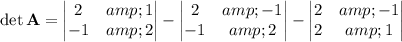

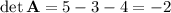

We compute the determinant by a Laplace expansion along the first column:

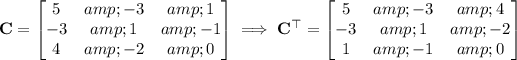
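Here is a minimal sketch of the same first-column expansion for a 3×3 matrix. Each term is an entry of column 1 times its cofactor, and the determinant is their sum. With 0-based row index `i`, the sign $(-1)^{(i+1)+1}$ reduces to `(-1) ** i`. The helper names and the example matrix are hypothetical.

```python
def minor(m, i, j):
    """Copy of m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]

def det2(m):
    """2x2 determinant: ad - bc."""
    (a, b), (c, d) = m
    return a * d - b * c

def first_column_terms(m):
    """Terms a_{i1} * C_{i1} of a 3x3 Laplace expansion down column 1."""
    return [(-1) ** i * m[i][0] * det2(minor(m, i, 0)) for i in range(3)]

A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]  # hypothetical example matrix
terms = first_column_terms(A)
print(terms, sum(terms))  # [-8, 9, -2] -1
```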

The cofactor matrix is

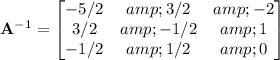

which makes the inverse

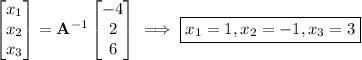

Finally,

- Gauss-Jordan elimination:

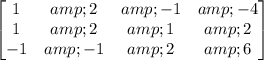

Take the augmented matrix

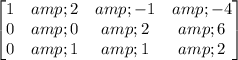

Subtract row 1 from row 2, and add row 1 to row 3:

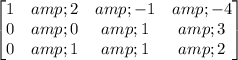

Multiply row 2 by 1/2:

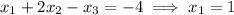

The second row tells us that

Then in the third row,

Then in the first row,
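A minimal sketch of Gauss-Jordan elimination on an augmented matrix $[A \mid \mathbf{b}]$, using exact `fractions.Fraction` arithmetic. It takes the first nonzero entry in each column as the pivot and assumes the coefficient matrix is square and nonsingular. The function name and the example system are hypothetical, not the system solved above. Unlike the hand computation, which reads the unknowns off individual rows, this version clears entries both above and below every pivot. The solution then appears directly in the last column.

```python
from fractions import Fraction

def gauss_jordan(aug):
    """Reduce an augmented matrix [A | b] to reduced row echelon form.

    Assumes A is square; raises ValueError if it is singular.
    """
    m = [[Fraction(x) for x in row] for row in aug]
    n = len(m)
    for col in range(n):
        # First row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("coefficient matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        # Subtract multiples of the pivot row to zero the rest of the column.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return m

# Hypothetical system: 2x + y - z = 8, -3x - y + 2z = -11, -2x + y + 2z = -3
aug = [[2, 1, -1, 8], [-3, -1, 2, -11], [-2, 1, 2, -3]]
rref = gauss_jordan(aug)
solution = [row[-1] for row in rref]
print(solution)  # [Fraction(2, 1), Fraction(3, 1), Fraction(-1, 1)]
```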