Answer:

The hawk reaches the rabbit at t ≈ 4.3 sec

Explanation:

Let

h ----> the height, in feet

t ----> the time, in seconds

v ----> the initial velocity, in feet per second

s ----> the starting height, in feet

We have the vertical motion model

h = -16t² + vt + s

When the hawk reaches the rabbit, the height h is equal to zero.

we have

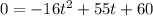

substitute

Solve the resulting quadratic equation by graphing.

The solution (taking the positive root, since time cannot be negative) is t ≈ 4.3 sec.

See the attached figure.
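As an algebraic cross-check of the graphing step, the positive root of 0 = -16t² + vt + s can also be found with the quadratic formula. This is a minimal sketch assuming the model h = -16t² + vt + s; the function name `time_to_ground` and the sample values (v = 32 ft/s, s = 48 ft) are hypothetical, since the actual values for this problem appear only in the image.

```python
import math


def time_to_ground(v: float, s: float) -> float:
    """Return the time t (seconds) when h = -16 t^2 + v t + s reaches 0.

    Assumes the vertical motion model with h and s in feet and v in ft/s.
    """
    a, b, c = -16.0, v, s
    disc = b * b - 4 * a * c
    if disc < 0:
        raise ValueError("the height never reaches zero")
    root_disc = math.sqrt(disc)
    # Both roots from the quadratic formula; with a < 0 the larger one
    # is the later time at which h = 0.
    t = max((-b + root_disc) / (2 * a), (-b - root_disc) / (2 * a))
    if t < 0:
        raise ValueError("no non-negative time solves the equation")
    return t


# Hypothetical example: v = 32 ft/s, s = 48 ft gives -16t^2 + 32t + 48 = 0,
# i.e. t^2 - 2t - 3 = 0, whose positive root is t = 3.
print(time_to_ground(32, 48))  # 3.0
```

Plugging in the actual v and s from the problem should give a value close to the t ≈ 4.3 sec read from the graph.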