Answer:

$t = \sqrt{\dfrac{2h}{g}}$, 6.1 s

Step-by-step explanation:

The dropped penny undergoes uniformly accelerated motion, with constant acceleration

$$g = 9.8\ \mathrm{m/s^2}$$

towards the ground. If the penny is dropped from a height of

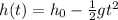

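the vertical position of the penny at time t is given by the equation

$$h(t) = h - \frac{1}{2} g t^2$$

where $h$ is the initial height, $g$ is the magnitude of the acceleration due to gravity, and the negative sign is due to the fact that the direction of the acceleration is downward.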

We want to know the time t at which the penny reaches the ground, which means h(t)=0. Substituting into the equation, it becomes

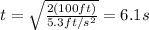

Rearranging, with $h$ the initial height and $g$ the acceleration due to gravity, we find an expression for the time t:

$$t = \sqrt{\frac{2h}{g}}$$

Substituting the numbers, we find the numerical value:

$$t \approx 6.1\ \mathrm{s}$$
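The fall-time formula can be checked numerically with a short sketch. The function name `fall_time`, the default value of 9.8 m/s² for gravity, and the 20 m example height are assumptions made for illustration; the height from the original problem is not reproduced here. Air resistance is ignored, as in the explanation above.

```python
import math


def fall_time(height_m: float, g: float = 9.8) -> float:
    """Time in seconds for an object dropped from rest to fall height_m metres.

    Solves 0 = h - (1/2) * g * t**2 for t, ignoring air resistance.
    The default g = 9.8 m/s^2 is an assumed value for Earth's surface.
    """
    if height_m < 0:
        raise ValueError("height must be non-negative")
    if g <= 0:
        raise ValueError("g must be positive")
    return math.sqrt(2.0 * height_m / g)


if __name__ == "__main__":
    # Hypothetical height, chosen only to demonstrate the call.
    example_height = 20.0
    print(f"Fall time from {example_height} m: {fall_time(example_height):.2f} s")
```

Because the time depends on the square root of the height, quadrupling the height only doubles the fall time.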