Answer:

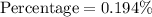

The percent error in this measurement is about 0.195%.

Explanation:

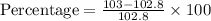

Given: A radar gun measured the speed of a baseball at 103 miles per hour, but the baseball was actually going 102.8 miles per hour.

To find: The percent error in this measurement.

Solution:

The actual speed is 102.8 miles per hour.

The measured speed is 103 miles per hour.

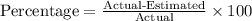

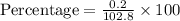

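The percent error is given by

$$\text{Percent error} = \frac{|\text{measured} - \text{actual}|}{\text{actual}} \times 100\%$$

$$= \frac{|103 - 102.8|}{102.8} \times 100\% = \frac{0.2}{102.8} \times 100\% \approx 0.195\%$$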

Therefore, the percent error in this measurement is about 0.195%.
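The same arithmetic can be checked with a short Python sketch. The helper name `percent_error` is hypothetical, introduced here only for illustration; it assumes the standard definition of percent error (absolute difference divided by the actual value, times 100).

```python
def percent_error(measured: float, actual: float) -> float:
    """Hypothetical helper: percent error of a measurement relative to the actual value."""
    if actual == 0:
        # Percent error is undefined when the actual value is zero
        raise ValueError("actual value must be nonzero")
    return abs(measured - actual) / abs(actual) * 100


# Radar-gun reading vs. the baseball's true speed (miles per hour)
error = percent_error(measured=103, actual=102.8)
print(f"{error:.3f}%")  # prints "0.195%"
```

Note that the denominator must be the actual speed: swapping the arguments (treating 103 as the actual value) would give about 0.194% instead.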