Answer:

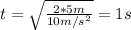

The correct option is b: 1.0 s.

Step-by-step explanation:

To find the time (t) at which the object reaches the ground, we use the following kinematic equation for vertical motion:

$$y_f = y_0 + v_0 t - \frac{1}{2} g t^2 \tag{1}$$

Where:

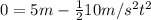

$y_f$: is the final height = 0

$y_0$: is the initial height = 5 m

$v_0$: is the initial speed = 0 (it falls from rest)

$g$: is the acceleration due to gravity = 10 m/s²

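By entering the above values into equation (1) we have:

$$0 = 5 + 0 \cdot t - \frac{1}{2}(10)\,t^2$$

Solving for t:

$$t = \sqrt{\frac{2 \cdot 5\ \text{m}}{10\ \text{m/s}^2}} = 1.0\ \text{s}$$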

Therefore, the correct option is b: 1.0 s.
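
If you want to double-check the arithmetic, here is a minimal Python sketch. The function name `fall_time` is just an illustrative choice, and it assumes the object starts from rest, lands at height 0, and that air resistance is ignored.

```python
import math

def fall_time(y0, g=10.0):
    """Time (s) to fall from height y0 (m) starting at rest.

    Comes from setting the final height to 0 and the initial speed to 0
    in the free-fall equation, which leaves 0 = y0 - g*t**2/2.
    """
    return math.sqrt(2.0 * y0 / g)

t = fall_time(5.0)        # initial height of 5 m, g = 10 m/s^2
print(f"t = {t:.1f} s")   # prints "t = 1.0 s"
```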

I hope it helps you!