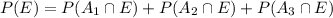

a. By the law of total probability,

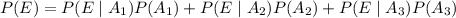

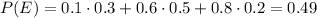

and using the definition of conditional probability we can expand the probabilities of intersection as

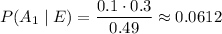

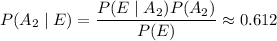
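
As a generic reminder of the two steps used in (a), here is a sketch in notation that is assumed for illustration and not taken from the exercise: $A$ is the event of interest and $B_1, \dots, B_n$ is a partition of the sample space with $P(B_i) > 0$.

$$
P(A) \;=\; \sum_{i=1}^{n} P(A \cap B_i) \;=\; \sum_{i=1}^{n} P(A \mid B_i)\, P(B_i)
$$

The first equality is the law of total probability; the second applies the definition of conditional probability, $P(A \cap B_i) = P(A \mid B_i)\,P(B_i)$, to each term.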

b. Using Bayes' theorem (or just the definition of conditional probability), we have

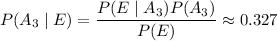
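
In the same assumed notation ($A$ an event with $P(A) > 0$, and $B_1, \dots, B_n$ a partition of the sample space; these symbols are illustrative, not the exercise's own), the step in (b) has the general form

$$
P(B_i \mid A) \;=\; \frac{P(A \cap B_i)}{P(A)} \;=\; \frac{P(A \mid B_i)\, P(B_i)}{\sum_{j=1}^{n} P(A \mid B_j)\, P(B_j)}
$$

where the denominator is the total probability already computed in (a).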

c. Same reasoning as in (b):

d. Same as before:

(Notice how the probabilities conditioned on the same event add up to 1.)
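
The sum-to-1 observation can be checked numerically. The sketch below is a minimal illustration with hypothetical priors and likelihoods (they are not the numbers from this exercise), and the helper `posteriors` is a name introduced here: it computes $P(A)$ by the law of total probability, applies Bayes' theorem to each event of the partition, and confirms that the resulting conditional probabilities sum to 1.

```python
from fractions import Fraction


def posteriors(priors, likelihoods):
    """Return P(B_i | A) for each i, given P(B_i) and P(A | B_i).

    Hypothetical helper for illustration; assumes the B_i form a partition
    of the sample space, so the priors sum to 1.
    """
    # Law of total probability: P(A) = sum_i P(A | B_i) * P(B_i)
    p_a = sum(l * p for l, p in zip(likelihoods, priors))
    # Bayes' theorem applied to each event of the partition
    return [l * p / p_a for l, p in zip(likelihoods, priors)]


# Hypothetical numbers, NOT taken from the exercise
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]        # P(B_i)
likelihoods = [Fraction(1, 10), Fraction(1, 5), Fraction(1, 2)]   # P(A | B_i)

post = posteriors(priors, likelihoods)
print(post)       # the three posteriors P(B_i | A) as exact fractions
print(sum(post))  # 1
```

Exact fractions are used so that the sum is exactly 1 and not a floating-point approximation of it.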
